
Content Delivery Network for Video Streaming and OTT Platforms

- Why a Million Minutes of Video Hit the Internet Each Second

- Video Is Eating the Internet: The Data Behind the Trend

- Viewer Expectations: Latency, Quality, and the Zero-Buffer Standard

- Inside a CDN Built for Streaming & OTT

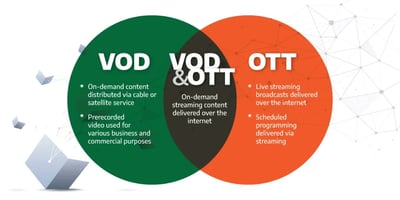

- Live vs. VOD: Two Workflows, One Edge Strategy

- How OTT Platforms Integrate CDNs End-to-End

- Security & Revenue Protection: DRM, Tokenization, and Beyond

- The Economics of Delivery: Cost Models and BlazingCDN’s Advantage

- Edge Compute, WebRTC, and the Future of Video Delivery

- Implementation Checklist & Best Practices

- Ready to Stream Without Limits?

Why a Million Minutes of Video Hit the Internet Each Second

Time for a reality check: by the time you reach the end of this sentence, more than 80,000 hours of video will have been streamed somewhere on the planet (a million minutes per second, sustained for the five or so seconds it takes to read this line). That figure, extrapolated from forecasts in the Cisco Annual Internet Report, is not hyperbole but a sober snapshot of today’s traffic curve. The COVID-19 pandemic accelerated digital video adoption by almost five years, collapsing boardroom roadmaps into sprint cycles. TikTok’s watch time doubled, Twitch logged 9.3 billion hours viewed in a single year, and Disney+ rocketed to 100 million subscribers in just 16 months. Behind every click stands a CDN quietly orchestrating trillions of HTTP requests.

Streaming success stories hide a darker truth: for each additional million viewers, the infrastructure bill and engineering complexity scale almost linearly, unless you weaponize the edge. Netflix famously built Open Connect, its private CDN, rather than rely on public providers for consistent quality at its scale. Most platforms, however, lack Netflix-level capex; they must squeeze every byte out of shared CDNs without trading away latency or reliability.

Mini-Annotation: In the next section, we’ll quantify exactly how fast video traffic is growing and why the internet’s architecture bends, but does not break, under the load—thanks to optimized CDN layers.

Quick Reflection: If your subscriber base doubled tomorrow, would your delivery budget double with it?

Video Is Eating the Internet: The Data Behind the Trend

Cisco forecasts that global IP video traffic will reach 3 zettabytes per year by 2025, equivalent to every person on Earth streaming the entire Lord of the Rings trilogy 68 times. Meanwhile, Sandvine’s Global Internet Phenomena Report attributes 65 percent of downstream bits in North America to real-time entertainment, a category dominated by Netflix, YouTube, Disney+, Prime Video, and live sports apps. Smartphone screens are the fastest-growing consumption surface, yet smart TVs account for the largest byte volume because viewers gravitate toward 4K HDR content that easily pushes 15–25 Mbps.

Device Explosion Equals Bitrate Inflation

HDR10+ and Dolby Vision deliver wider color gamuts and demand greater luminance precision, so even aggressively compressed 4K streams still hover near 20 Mbps. Add high frame rate (HFR) video at 60–120 fps for sports and bitrates climb to around 30 Mbps. Edge delivery, not origin compute, becomes the bottleneck.
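To see why delivery becomes the bottleneck, it helps to convert bitrate into bytes. A minimal sketch, assuming decimal units (1 GB = 10^9 bytes) and a constant bitrate for the whole viewing period; the function name is illustrative:

```python
def egress_gb(bitrate_mbps: float, viewer_hours: float) -> float:
    """Data delivered at a constant bitrate over the given viewing time, in decimal GB."""
    bits = bitrate_mbps * 1_000_000 * 3600 * viewer_hours  # Mbps -> bits per hour
    return bits / 8 / 1e9                                   # bits -> bytes -> GB


for label, rate in [("4K HDR", 20), ("4K HFR", 30)]:
    print(f"{label}: {egress_gb(rate, 1):.1f} GB per viewer-hour")
```

At 20 Mbps that is 9 GB per viewer-hour, so a million viewer-hours of 4K HDR add up to roughly 9 PB of edge egress.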

Codec Tug-of-War

HEVC holds 45 percent market share on connected TVs, AV1 adoption is growing on Android, and VVC (Versatile Video Coding) looms, promising another 30 percent efficiency leap. Each codec transition introduces a multi-year compatibility overlap, ballooning storage and cache requirements because the same title must exist in parallel ladders. A well-tuned CDN offsets that bloat through intelligent tiered caching and on-the-fly packaging.

Look Ahead: Up next we’ll unmask how microscopic delays translate into macroscopic revenue impact, and why modern viewers impose a “zero-buffer” ultimatum.

Viewer Expectations: Latency, Quality, and the Zero-Buffer Standard

Human impatience is measurable. An MIT study found the emotional response to buffering resembles mild anxiety, evidenced by elevated heart rates and micro-expressions. Akamai’s “State of Online Video” report shows that once start-up delay passes two seconds, each additional second increases the abandonment rate by 5.8 percent. Scale that to 10 million monthly viewers and a single extra second could cost roughly 580,000 sessions, along with the ad or subscription revenue attached to them.

Psychology Matters

Buffering wheels don’t just irritate; they violate an implicit pact between platform and user: effortless gratification. In social-media-driven economies, a single viral clip of a buffering fail can snowball into brand erosion.

Critical QoE Benchmarks

| Metric | Acceptable Threshold | Business Impact If Breached |
|---|---|---|
| Start-Up Time (SUT) | < 2 s VOD / < 1 s live | Churn increases ≥ 25 % beyond 3 s |
| Average Rendering Quality | ≥ 90 % of session time in 1080p or better | Apps falling short face 2× cancellation risk |
| Rebuffer Frequency | < 0.30 events per 10 min | Each additional rebuffer cuts engagement by 7 % |
| Live Edge Latency | < 5 s for sports/esports | Delay disrupts in-app betting and chat sync |
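These benchmarks translate directly into automated checks. The sketch below encodes the four thresholds from the table for a single session; the `SessionStats` fields are hypothetical names for aggregates a player SDK might report, and rebuffer frequency is assumed to be normalized per 10 minutes of watch time.

```python
from dataclasses import dataclass


@dataclass
class SessionStats:
    # Hypothetical per-session aggregates reported by the player
    startup_s: float               # start-up time (SUT), seconds
    share_1080p_or_better: float   # fraction of session time at >= 1080p (0..1)
    rebuffers: int                 # rebuffer events in the session
    watch_minutes: float           # session length, minutes
    live: bool = False
    live_latency_s: float = 0.0    # only meaningful for live sessions


def qoe_violations(s: SessionStats) -> list:
    """Return the names of the table thresholds this session breaches."""
    problems = []
    if s.startup_s >= (1.0 if s.live else 2.0):
        problems.append("startup_time")
    if s.share_1080p_or_better < 0.90:
        problems.append("rendering_quality")
    # Normalize rebuffer count to events per 10 minutes of viewing
    if s.watch_minutes > 0 and s.rebuffers / s.watch_minutes * 10 >= 0.30:
        problems.append("rebuffer_frequency")
    if s.live and s.live_latency_s >= 5.0:
        problems.append("live_edge_latency")
    return problems
```

A VOD session with a 2.4 s start and one stall in 20 minutes breaches both the start-up and rebuffer thresholds, even though its picture quality is fine.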

CDNs improve these metrics through instant failover, route optimization, and content pre-positioning. They treat latency not as a fixed property of the network but as a parameter to be tuned.

Challenge: When was the last time you plotted TTFF (Time to First Frame) by ISP and device? The patterns might surprise you.
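Plotting TTFF by ISP and device starts with grouping beacon data. A minimal sketch using only the standard library, assuming each beacon arrives as a hypothetical `(isp, device, ttff_ms)` tuple; it reports sample count, median and 95th percentile per group so outliers stand out before you chart them.

```python
from collections import defaultdict
from statistics import median, quantiles


def ttff_by_segment(beacons):
    """Group (isp, device, ttff_ms) beacons and summarize each group."""
    groups = defaultdict(list)
    for isp, device, ttff_ms in beacons:
        groups[(isp, device)].append(ttff_ms)

    summary = {}
    for key, values in groups.items():
        if len(values) >= 2:
            # n=20 yields 19 cut points in 5 % steps; index 18 is the 95th percentile.
            # "inclusive" keeps the estimate within the observed range.
            p95 = quantiles(values, n=20, method="inclusive")[18]
        else:
            p95 = values[0]
        summary[key] = {"count": len(values), "p50": median(values), "p95": p95}
    return summary
```

Sorting the resulting groups by `p95` rather than `p50` usually surfaces the ISP and device pairs whose tail latency drives abandonment.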

Inside a CDN Built for Streaming & OTT

Generic CDNs can excel at serving JPEGs and JavaScript, but streaming introduces domain-specific nuances. Below is a deeper dive into the constituent components of a video-first CDN.

1. Programmable Origin Shield

Acting as a “traffic cop,” origin shield nodes collapse multiple identical requests across geographies into a single upstream fetch, trimming origin egress by 70–95 percent during premieres. Some platforms stack shields in tiers (continental, then regional) to absorb pathological spikes.
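Collapsing identical requests is commonly called request coalescing (or "single-flight"). The sketch below shows the idea with `asyncio`: concurrent misses for the same segment key share one upstream fetch. `fetch_from_origin` is a hypothetical stand-in for the real origin call, and caching of the result after the fetch is left out.

```python
import asyncio


class CoalescingShield:
    """Collapse concurrent requests for the same key into one upstream fetch."""

    def __init__(self, fetch_from_origin):
        self._fetch = fetch_from_origin   # hypothetical origin coroutine
        self._inflight = {}               # key -> Future shared by all waiters
        self.origin_fetches = 0

    async def get(self, key: str) -> bytes:
        fut = self._inflight.get(key)
        if fut is None:
            # First requester: publish a future, then perform the single fetch
            fut = asyncio.get_running_loop().create_future()
            self._inflight[key] = fut
            self.origin_fetches += 1
            try:
                fut.set_result(await self._fetch(key))
            except Exception as exc:
                fut.set_exception(exc)    # every waiter sees the same error
            finally:
                del self._inflight[key]
        # All requesters, including the first, await the same result
        return await fut


async def demo():
    async def fake_origin(key):
        await asyncio.sleep(0.05)         # simulated origin round trip
        return f"bytes of {key}".encode()

    shield = CoalescingShield(fake_origin)
    results = await asyncio.gather(*(shield.get("seg_001.m4s") for _ in range(100)))
    return shield.origin_fetches, len(set(results))
```

Running `asyncio.run(demo())` fires 100 simultaneous requests for one segment, yet only one reaches the origin.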

2. Chunk-Aware Edge Caches

Unlike monolithic file caching, stream-aware nodes track segment sequence numbers and can coalesce partial-object requests. They also support sparse range reads to avoid over-fetching an entire multi-MB segment when a player needs only the first 2 s chunk of a 10 s segment.
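Serving part of a cached segment comes down to honoring HTTP `Range` requests as defined in RFC 9110. A minimal sketch, assuming the whole segment is already in memory and only a single `bytes=` range is requested; multi-range requests and `If-Range` validation are deliberately left out, and `slice_segment` is an illustrative name.

```python
import re

_RANGE = re.compile(r"bytes=(\d*)-(\d*)")


def slice_segment(segment: bytes, range_header: str):
    """Return (status, body, content_range) for a single-range request."""
    size = len(segment)
    m = _RANGE.fullmatch(range_header.strip())
    if m is None or (m.group(1) == "" and m.group(2) == ""):
        return 200, segment, None                 # unsupported syntax: ignore Range
    first, last = m.group(1), m.group(2)
    if first == "":
        # Suffix form "bytes=-N": the final N bytes of the segment
        suffix = int(last)
        if suffix == 0 or size == 0:
            return 416, b"", f"bytes */{size}"
        start, end = max(size - suffix, 0), size - 1
    else:
        start = int(first)
        if last and int(last) < start:
            return 200, segment, None             # invalid range-spec: ignore Range
        if start >= size:
            return 416, b"", f"bytes */{size}"    # starts past the end: unsatisfiable
        end = min(int(last), size - 1) if last else size - 1
    return 206, segment[start:end + 1], f"bytes {start}-{end}/{size}"
```

A request such as `bytes=0-1048575` then returns only the first MiB of the segment with a `206 Partial Content` status.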

3. Adaptive Prefetch Algorithms

Machine-learning models predict hot content 30–45 minutes before demand peaks by correlating social buzz, trailer views, and historical traffic patterns. Pre-warm jobs stage renditions in edge storage, avoiding “cache fill storms” that traditionally swamp the origin.
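The prediction models themselves are platform-specific, but the step that consumes their output is easy to sketch: given a predicted demand figure per rendition, stage the highest-value objects until the edge storage budget is full. The inputs below are hypothetical, and this greedy requests-per-GB heuristic is a simplification, not an optimal knapsack solver or an ML model.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    object_id: str              # hypothetical rendition identifier
    predicted_requests: float   # model output: expected requests in the window
    size_gb: float


def plan_prewarm(candidates, budget_gb: float):
    """Greedily pick objects with the most predicted requests per GB of storage."""
    ranked = sorted(candidates,
                    key=lambda c: c.predicted_requests / c.size_gb,
                    reverse=True)
    plan, used = [], 0.0
    for c in ranked:
        if used + c.size_gb <= budget_gb:   # skip anything that no longer fits
            plan.append(c.object_id)
            used += c.size_gb
    return plan, used
```

Ranking by requests per GB rather than raw requests keeps one oversized 4K ladder from crowding out several smaller, nearly as popular renditions.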

4. Transport Innovation

- HTTP/3 (QUIC): Multiplexed UDP transport sidesteps head-of-line blocking and improves mobile resume rates by 15 %.

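- BBR & CUBIC Congestion Control: Both adjust sending behavior to maximize goodput; BBR, which models bandwidth and round-trip time instead of reacting to packet loss, generally holds up better than CUBIC on lossy last-mile links.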

- Low-Latency CMAF: 200 ms chunk durations with IDR-frame alignment permit sub-3 s live latency without WebRTC.
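A rough latency budget shows why short chunks matter. This sketch assumes the packager must finish one chunk before sending it, the player keeps a fixed number of chunks buffered, and encode plus packaging/CDN delays are constants you measure yourself; every figure in the usage note is hypothetical.

```python
def live_latency_s(chunk_s: float, buffered_chunks: int,
                   encode_s: float, package_and_cdn_s: float) -> float:
    """Approximate glass-to-glass latency for chunked live delivery, in seconds."""
    # One chunk of delay while it is produced, plus the chunks held in the player buffer
    return encode_s + package_and_cdn_s + chunk_s * (1 + buffered_chunks)
```

With 200 ms chunks, five buffered chunks, 1.0 s of encoding and 0.5 s of packaging and CDN transit, the estimate is about 2.7 s; the same pipeline with 6 s segments and three buffered comes out near 25.5 s.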

Edge Intelligence Layer

Serverless functions execute at request time for tasks such as instant failover, manifest re-writing, or dynamic ad-insertion tracking pixels, removing round-trips to centralized infrastructure.
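Manifest rewriting is one of the simplest edge functions to picture. The sketch below rewrites every segment URI in an HLS media playlist (any non-blank line that is not a `#` tag) to an absolute URL on a chosen hostname and appends a query-string token. The hostname, the `token` parameter name and the function name are hypothetical; URIs embedded inside tag attributes (for example `EXT-X-KEY` or `EXT-X-MAP`) are not touched, and a real edge runtime would supply its own request/response API.

```python
from urllib.parse import urljoin


def rewrite_playlist(playlist: str, base_url: str, token: str) -> str:
    """Point every segment URI at base_url and attach a token parameter."""
    out = []
    for line in playlist.splitlines():
        stripped = line.strip()
        if stripped and not stripped.startswith("#"):
            url = urljoin(base_url, stripped)           # resolve relative segment URIs
            sep = "&" if "?" in url else "?"            # keep existing query strings
            line = f"{url}{sep}token={token}"
        out.append(line)
    return "\n".join(out) + "\n"
```

Because the rewrite happens per request, the same origin playlist can be steered to a different CDN hostname or carry a per-viewer token without re-packaging anything.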

Preview: Live streaming introduces a sense of urgency unknown in VOD. The next section maps those differences to concrete CDN configurations.

Live vs. VOD: Two Workflows, One Edge Strategy

Think of VOD as chess—strategic, predictable—and live streaming as blitz chess, where each second counts. The CDN must be ambidextrous.

Live Streaming Required Capabilities

- Event Auto-Scaling: Capacity ratchets up within minutes. Platforms like Peacock scaled from 0 to 6 Tbps in under 20 minutes for the Olympics opening ceremony.

- Real-Time Telemetry: Sub-second analytics feed dashboards guiding immediate bitrate or captioning fixes.

- Instant Global Purge: For rights compliance, feeds may need to shut down in specific regions the moment a match ends.

- UDP-Friendly Edges: WebRTC and SRT rely on UDP, so UDP support at the edge is no longer optional.

VOD Optimization Levers

- Storage Lifecycle Management: “Cold” titles move to cheaper object storage, while “hot” releases stay on SSD.

- Granular Cache Keys: Include codec, resolution, and subtitle language to avoid object collisions.

- Smart Eviction Policies: Replace basic LRU with cost-aware algorithms that consider both request frequency and object size.
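Both VOD levers can be sketched together. The cache key folds in codec, resolution and subtitle language so renditions never collide, and the eviction policy is Greedy-Dual-Size-Frequency (GDSF), one well-known cost-aware alternative to LRU that favors small, frequently requested objects. The key format and class are illustrative assumptions, not any particular CDN's implementation, and every object is assumed to have the same fetch cost.

```python
import heapq


def cache_key(title_id: str, codec: str, height: int, subtitle_lang: str,
              segment: int) -> str:
    """Distinct renditions of the same segment never share a key."""
    return f"{title_id}/{codec}/{height}p/{subtitle_lang}/{segment:06d}"


class GDSFCache:
    """Greedy-Dual-Size-Frequency eviction with a uniform cost of 1 per object."""

    def __init__(self, capacity_bytes: int):
        self.capacity = capacity_bytes
        self.used = 0
        self.clock = 0.0     # inflation value L: priority of the last evicted object
        self.entries = {}    # key -> (size, frequency, priority)
        self.heap = []       # (priority, key); may contain stale items

    def _priority(self, size: int, freq: int) -> float:
        return self.clock + freq / size

    def access(self, key: str, size: int) -> bool:
        """Record a request; return True on a hit, False on a miss."""
        if key in self.entries:
            s, freq, _ = self.entries[key]
            prio = self._priority(s, freq + 1)
            self.entries[key] = (s, freq + 1, prio)
            heapq.heappush(self.heap, (prio, key))  # old heap item becomes stale
            return True
        if size > self.capacity:
            return False                            # too large to cache at all
        while self.used + size > self.capacity:
            prio, victim = heapq.heappop(self.heap)
            entry = self.entries.get(victim)
            if entry is None or entry[2] != prio:
                continue                            # stale heap item, skip it
            self.clock = prio                       # ages every remaining object
            self.used -= entry[0]
            del self.entries[victim]
        prio = self._priority(size, 1)
        self.entries[key] = (size, 1, prio)
        heapq.heappush(self.heap, (prio, key))
        self.used += size
        return False
```

Unlike LRU, a large segment requested twice can still be evicted before a small segment requested once, because its priority per byte is lower.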

Performance Convergence

Even though the operational requirements diverge, a single programmable CDN tier can serve both, provided it exposes multidimensional traffic-steering APIs.

Checkpoint: Could your infrastructure survive a 25-second encoder outage by instantly switching to backup ingest? If not, your live funnel leaks revenue.

How OTT Platforms Integrate CDNs End-to-End

At their core, OTT platforms are polyglot stacks: React or Swift front-ends, Golang or Node.js back-ends, micro-services for user profiles, billing, and recommendations—all bound together by a CDN that masks geographic and device diversity.

Typical OTT Delivery Path

- A viewer taps “Play” on an Android TV box.

- The app requests a signed manifest via the CDN; the edge verifies the token issued by the authentication micro-service.

- The edge serves the manifest from cache or retrieves it from the origin shield.

- The player requests segments; the CDN returns the nearest cached copy while QoE metrics (TTFF, rebuffers) are recorded in real time.

- For ad-supported tiers, SSAI workflows splice in pre-encoded ads, and the CDN caches the ad segments across its edge nodes to prevent waterfall latency.

- DRM license exchange occurs over a separate API, tunneled through the edge so DRM servers are not exposed to the public internet.

Multi-CDN Traffic Steering

CDN diversity insulates against regional outages and price hikes. Real User Measurement (RUM) clients embedded in player SDKs feed latency, throughput, and rebuffer stats to a steering service, which re-calculates weights every 30 seconds. Result: a 15–25 % average QoE uplift and 5–8 % bandwidth cost reduction as traffic automatically shifts toward the most efficient provider in a given region.
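The core of a steering service can be reduced to scoring each CDN from recent RUM aggregates for one region and normalizing the scores into traffic weights. The scoring formula and its coefficients are hypothetical placeholders to tune against your own data; recalculating every 30 seconds would simply mean calling this function on a timer.

```python
from dataclasses import dataclass


@dataclass
class RumStats:
    # Hypothetical per-CDN aggregates for one region over the last window
    p95_latency_ms: float
    throughput_mbps: float
    rebuffer_ratio: float  # stall time / watch time, 0..1


def steering_weights(stats: dict, floor: float = 0.05) -> dict:
    """Convert per-CDN RUM stats into traffic weights that sum to 1.0."""
    scores = {}
    for cdn, s in stats.items():
        # Illustrative score: reward throughput, penalize latency and stalls
        latency_penalty = 1 + s.p95_latency_ms / 100
        stall_penalty = 1 - min(s.rebuffer_ratio * 10, 0.9)
        scores[cdn] = s.throughput_mbps / latency_penalty * stall_penalty
    total = sum(scores.values())
    if total <= 0:
        return {cdn: 1 / len(stats) for cdn in stats}  # no signal: split evenly
    # Keep a small share on every CDN so RUM data keeps flowing for all of them;
    # renormalizing afterwards can pull that share slightly below `floor`
    floored = {cdn: max(score / total, floor) for cdn, score in scores.items()}
    norm = sum(floored.values())
    return {cdn: w / norm for cdn, w in floored.items()}
```

The floor matters: a CDN that receives zero traffic produces zero measurements, so the steering service could never notice when it recovers.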

Case Snapshot: Global Sports Rights Holder

A leading European football league leverages four CDNs across 200 countries. During match days, live concurrency peaks at 18 million. A real-time switch to an alternate CDN within 90 seconds during a partial outage in Southeast Asia preserved 1.4 million streams, averting SLA penalties estimated at $4.2 million.

Question for You: Does your SLA include real-time penalties for rebuffer spikes? If yes, how do you verify them in the field?

Security & Revenue Protection: DRM, Tokenization, and Beyond

Piracy, credential stuffing, geo-spoofing—threats against OTT platforms mirror the sophistication of the tech stack. A 2023 report by Parks Associates asserts that 23 percent of streaming subscribers share passwords outside their household, costing providers $2.6 billion annually.

Edge-Centric Security Arsenal

- Geo-Fence Enforcement: IP geolocation databases, cross-checked against GPS or billing address, block illicit VPN access.

- Tokenized Manifests: Short-lived (≤ 300 s) JWTs signed with rotating keys narrow the window for replay attacks. Tokens can also embed the viewer’s entitlement level, shutting off 4K streams for SD subscribers and preventing bandwidth over-delivery.

- Forensic Watermarking: Invisible per-session marks inserted server-side and decoded from leaked footage to pinpoint culprit accounts.

- Edge WAF: Automated rules block XSS and SQL injection against login APIs.

- Bot Management: Fingerprinting and behavioral analysis stop massive credential-stuffing on launch days.
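Here is what edge-side validation of a tokenized request might look like for an HS256-signed JWT, using only Python's standard library. It picks the signing key by the `kid` header (to support key rotation), enforces expiry and the ≤ 300 s lifetime mentioned above, and compares a hypothetical `max_height` entitlement claim with the requested rendition. The claim name and key store are assumptions; production code would normally rely on a maintained JWT library.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional


def _b64url_decode(part: str) -> bytes:
    return base64.urlsafe_b64decode(part + "=" * (-len(part) % 4))


def verify_token(token: str, keys: dict, requested_height: int,
                 now: Optional[float] = None, max_lifetime_s: int = 300) -> bool:
    """Validate an HS256 JWT and the viewer's entitlement for one rendition."""
    now = time.time() if now is None else now
    try:
        header_b64, payload_b64, sig_b64 = token.split(".")
        header = json.loads(_b64url_decode(header_b64))
        payload = json.loads(_b64url_decode(payload_b64))
        signature = _b64url_decode(sig_b64)
        key = keys[header["kid"]]               # rotating-key lookup by key ID
        if header.get("alg") != "HS256":        # reject "none" and algorithm swaps
            return False
        signing_input = f"{header_b64}.{payload_b64}".encode()
        expected = hmac.new(key, signing_input, hashlib.sha256).digest()
        if not hmac.compare_digest(expected, signature):
            return False
        iat, exp = float(payload["iat"]), float(payload["exp"])
        if not (iat <= now < exp) or exp - iat > max_lifetime_s:
            return False                        # expired, not yet valid, or too long-lived
        # Hypothetical entitlement claim: tallest rendition this viewer may fetch
        return requested_height <= int(payload.get("max_height", 0))
    except (ValueError, KeyError, TypeError, AttributeError):
        return False                            # malformed token
```

Rejecting tokens whose lifetime exceeds the limit, not just expired ones, stops a leaked long-lived token from being accepted even if it was signed with a valid key.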

By moving these controls to the edge, you avoid tromboning traffic back to central data centers, which would otherwise add latency and inflate egress bills.

Next Stop: Enough theory—let’s crunch numbers and see how delivery economics can make or break your content roadmap.

The Economics of Delivery: Cost Models and BlazingCDN’s Advantage

Egress tends to be the single largest operational expense for streaming businesses after content licensing. For context, analysts peg Netflix’s annual delivery spend at nearly $1 billion, despite its proprietary Open Connect network. Mid-tier OTT players juggling 5–20 PB a month can easily face quarterly six-figure CDN invoices.

Traditional Pricing Models

| Model | Pros | Cons | Ideal Use Case |

|---|---|---|---|

| Per-GB Tiered | Straightforward billing | Unit cost remains high for 4K streams | Early-stage services |

| 95th Percentile | Discounted during off-peak | Spikes raise blended cost unpredictably | Linear TV simulcasts |

| Committed Volume | Lowest unit price | Overage fees, long-term lock-ins | Established SVOD platforms |
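The models diverge most when traffic is bursty. The sketch below computes a classic 95th-percentile ("burstable") bill, which sorts the month's 5-minute Mbps samples, discards the top 5 % and bills the highest remaining sample, next to a flat per-TB bill for the same traffic. Both unit prices are hypothetical inputs.

```python
def p95_mbps(samples_mbps) -> float:
    """Billable rate: the highest sample left after dropping the top 5 %."""
    ordered = sorted(samples_mbps)
    index = (len(ordered) * 95 + 99) // 100 - 1   # integer ceil(0.95 * n) - 1
    return ordered[index]


def monthly_costs(samples_mbps, price_per_mbps: float, price_per_tb: float) -> dict:
    """Compare 95th-percentile billing with flat per-TB billing for one month."""
    # Each sample covers 300 s: Mbps * s = megabits, / 8 = MB, / 1e6 = TB
    total_tb = sum(s * 300 / 8 for s in samples_mbps) / 1_000_000
    return {
        "p95_bill": p95_mbps(samples_mbps) * price_per_mbps,
        "per_tb_bill": total_tb * price_per_tb,
        "total_tb": total_tb,
    }
```

A 30-day month holds 8,640 five-minute samples, so about 36 hours of bursts fall inside the discarded 5 %; one hour more and the spike itself becomes the billed rate.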

BlazingCDN flips the script with a flat $4 per TB rate, no region-based premiums, free TLS, and real-time log streaming usually sold as add-ons by legacy vendors. Independent benchmarks using PerfOps across 300,000 RUM data points confirm round-trip latencies comparable to Amazon CloudFront in Europe, North America, and rapidly growing APAC regions—all while undercutting typical hyperscaler pricing by 60–70 percent. Large enterprises value this equilibrium of performance and cost, evidenced by multiple Fortune 500 media brands migrating petabyte-scale workflows without a single minute of downtime (BlazingCDN backs a 100 % uptime SLA).

Curious whether the math checks out for your library? Run your usage through the transparent calculator on BlazingCDN’s enterprise-grade CDN for media companies page to see whether seven-figure annual savings are realistic for you.

Thought Experiment: How many new originals or licensing deals could you greenlight with a 50 % drop in egress spend?

Edge Compute, WebRTC, and the Future of Video Delivery

Yesterday’s buzzwords are today’s requirements. Edge compute, once exotic, now underpins interactive streaming, personalized advertising, and AI-driven content curation.

Trendline 1: WebRTC-Based Ultra-Low Latency

WebRTC circumvents the multi-second latency plateau of HTTP-based protocols, achieving sub-500 ms glass-to-glass delays. Platforms like FuboTV and betting apps integrate watch parties and real-time odds overlays directly into streams. CDNs that terminate WebRTC as close to users as possible bring jitter under 80 ms, which is critical for live auctions, virtual classrooms, and emerging VR environments.
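Jitter figures like these are usually computed with the interarrival-jitter estimator from RTP (RFC 3550, section 6.4.1), which WebRTC statistics also report. A minimal sketch, assuming send and receive timestamps for consecutive packets have already been converted to milliseconds:

```python
def interarrival_jitter(send_ms, recv_ms) -> float:
    """RFC 3550 running jitter estimate J, in milliseconds."""
    jitter = 0.0
    for i in range(1, len(send_ms)):
        # D: change in one-way transit time between consecutive packets
        d = (recv_ms[i] - recv_ms[i - 1]) - (send_ms[i] - send_ms[i - 1])
        jitter += (abs(d) - jitter) / 16   # exponential smoothing with gain 1/16
    return jitter
```

A constant transit delay, however large, yields zero jitter; only variation between packets raises the estimate, which is why edge proximity helps even when average latency is already acceptable.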

Trendline 2: Edge Transcoding & Just-In-Time Packaging

GPU-accelerated nodes transcode 720p to 360p on demand, dropping storage requirements by 40 % and ensuring you never waste cache real estate on renditions nobody watches. Add dynamic encryption and ad-marker insertion at the edge and you compress end-to-end latency while simplifying your origin pipeline.

Trendline 3: AI-Powered QoE Tuning

Real-time analysis of stall events feeds reinforcement learning models that proactively adjust the ABR ladder or nudge traffic to alternate routes: automated self-healing that improves availability beyond what traditional SLAs guarantee.

Trendline 4: 5G & Network Slicing

Some mobile network operators (MNOs) already offer premium slices to OTT partners, guaranteeing bitrate floors. CDNs integrated with the 5G core can pin traffic to those slices, ensuring predictable QoE in congested stadiums.

Heads-Up: Failing to future-proof your pipeline today means rewriting it under duress tomorrow.

Implementation Checklist & Best Practices

Phase 1: Traffic & Audience Audit

- Segment viewers by device, geography, and time-of-day engagement.

- Quantify codec distribution (H.264, HEVC, AV1) to inform storage planning.

- Forecast concurrency peaks for special events, including a 30 % safety margin.
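A concurrency forecast becomes an edge-capacity target once you multiply by an average delivered bitrate and apply the 30 % safety margin from the checklist. A minimal sketch; the function name and the inputs in the usage note are hypothetical.

```python
def required_capacity_tbps(peak_concurrency: int, avg_bitrate_mbps: float,
                           safety_margin: float = 0.30) -> float:
    """Edge egress to provision for a forecast peak, in Tbps."""
    # Viewers * Mbps per viewer = total Mbps; 1 Tbps = 1,000,000 Mbps
    return peak_concurrency * avg_bitrate_mbps * (1 + safety_margin) / 1_000_000
```

For example, 2 million concurrent viewers averaging 6 Mbps call for about 15.6 Tbps of provisioned capacity.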

Phase 2: CDN Proof-of-Concept

- Spin up a staging property mirroring production cache rules.

- Inject RUM beacons into test players; measure TTFF, rebuffer, effective bitrate.

- Simulate last-mile congestion using network link conditioners.

Phase 3: Contract & SLA Negotiation

- Demand real-time log access—not 24-hour delayed CSV exports.

- Negotiate penalty clauses per region, not global aggregates.

- Include provisions for codec-agnostic pricing to accommodate AV1 and VVC.

Phase 4: Gradual Rollout

- Ramp steered traffic in 5 % increments; verify QoE before each next step.

- Enable cold-start prefetch for new episodes 12 hours pre-release.

- Institute automated canary alerts when the rebuffer ratio reaches 0.5 %.
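A canary gate can compare the rebuffer ratio on the canary slice (total stall time divided by total watch time, our reading of the 0.5 % figure) against that trip wire. A minimal sketch; the input shape and the one-hour minimum of watch time before judging are assumptions.

```python
def canary_verdict(stall_seconds: float, watch_seconds: float,
                   threshold: float = 0.005, min_watch_seconds: float = 3600) -> str:
    """Return 'insufficient-data', 'alert', or 'ok' for a canary slice."""
    if watch_seconds < min_watch_seconds:
        return "insufficient-data"   # too little viewing to judge the canary fairly
    ratio = stall_seconds / watch_seconds
    return "alert" if ratio >= threshold else "ok"
```

Requiring a minimum amount of watch time keeps a single unlucky session at the start of a 5 % ramp from triggering a rollback on its own.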

Phase 5: Continuous Optimization

- Monthly cost audits vs. viewer minutes; renegotiate commits quarterly.

- Run chaos experiments: kill 10 % of edge nodes and monitor auto-failover.

- Benchmark HTTP/3 uptake; advertise HTTP/3 to capable clients via Alt-Svc headers so devices upgrade as their network stacks allow.

Field Tip: Attach a budget line to QoE metrics—engineers move faster when every buffer event has a dollar figure.

Ready to Stream Without Limits?

You’ve seen the numbers, dissected the workflows, and weighed the economics. The next step is action. Tell us the single biggest obstacle in your current streaming stack—latency, cost, security—and challenge the community for solutions. Or skip straight to hands-on: spin up a test property, push traffic through modern edge logic, and watch buffering melt away. Your audience demands flawless playback; give it to them now.