Edge Functions in CDNs: How Serverless at the Edge Is Changing Content Delivery

According to Akamai’s State of Online Retail Performance report, a 100‑millisecond delay in page load time can cut conversion rates by up to 7%, and that was before users became even less patient and more mobile-first.

When every millisecond can mean lost revenue, traditional cache-and-fetch CDNs are no longer enough. The new competitive edge is exactly that: the edge itself, now programmable through edge functions and serverless at the edge. Instead of just caching static files, your CDN can run logic closer to users than your origin ever could.

This shift is quietly rewriting how high-traffic platforms stream video, secure APIs, personalize content, and ship new features — often without touching their core backend at all. In this article, we’ll unpack how edge functions in CDNs work, where they shine, and how enterprises can use them to cut costs while delivering faster, smarter experiences worldwide.

From Static Caching to Programmable Edge

Before we can appreciate edge functions, it’s worth remembering what CDNs used to be — and why that model is now under pressure.

CDNs originally solved a single problem: distance. By caching static assets (images, JS, CSS, videos) closer to users, they reduced round trips to centralized data centers. For years, that was enough. Web apps were simpler, pages more static, and user expectations lower.

Then mobile traffic exploded, single‑page apps took over, and experiences became highly personalized: pricing tailored to region, recommendations tuned to behavior, UIs adapted to device and network conditions. The bottleneck shifted from serving bytes to executing logic.

Yet that logic still lived in a handful of origin regions. Even with a CDN in front, every personalized decision — authentication, A/B test, geo‑routing, localization, paywall checks — often meant another trip back to the origin. The result: rising latency, higher origin load, and ballooning infrastructure bills.

Cloud providers and CDNs responded in two waves:

- General serverless in the cloud (AWS Lambda, Azure Functions, Google Cloud Functions) cut ops overhead but kept compute in central regions.

- Serverless at the edge (Cloudflare Workers, Fastly Compute, Akamai EdgeWorkers, and similar offerings) pushed that compute into the CDN layer itself.

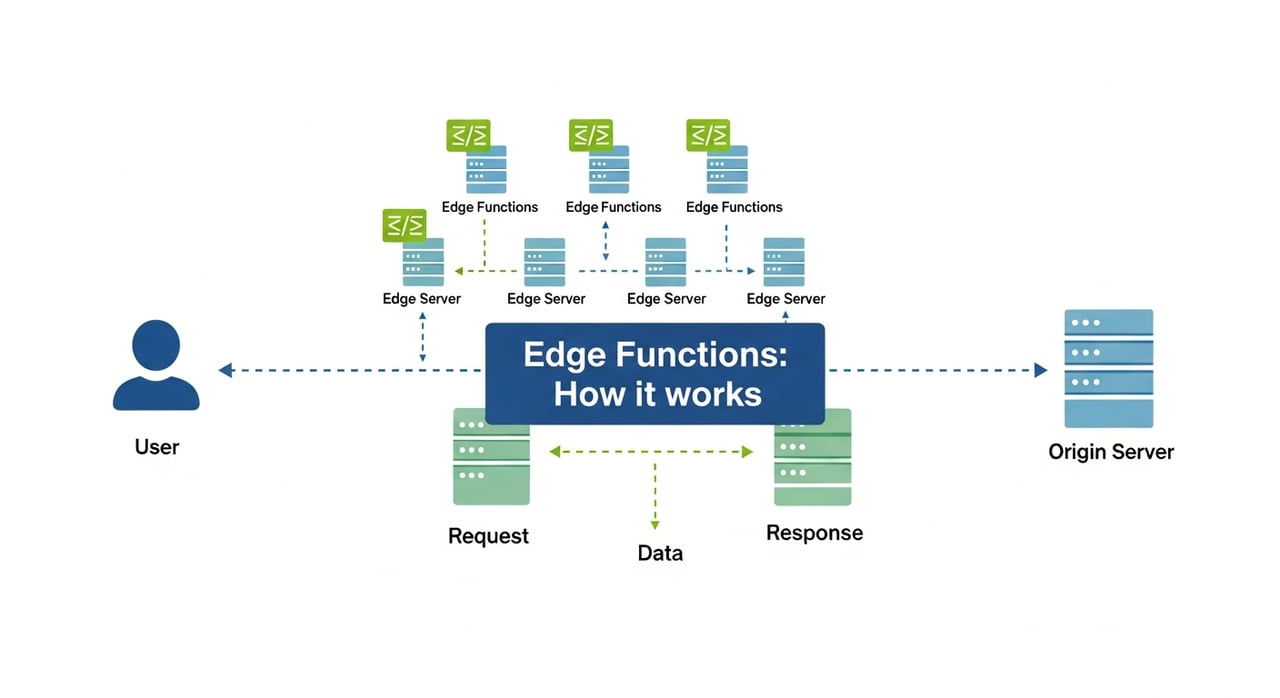

This second wave is where edge functions live: tiny, event‑driven pieces of code running in the CDN path — so close to the user that network distance almost disappears.

As you think about your own stack: how many of your current origin responsibilities are really just lightweight decisions that would be far better off executed at the edge?

What Exactly Are Edge Functions in a CDN?

This section gives you a precise mental model of edge functions so that design and cost discussions stop being vague.

At their core, edge functions are short‑lived, event‑driven programs that run inside a CDN’s infrastructure, typically triggered by HTTP requests and responses. You can think of them as programmable hooks in the delivery pipeline.

Common characteristics across major providers include:

- Event-driven execution: Functions run on events such as request received, response ready, or cache miss.

- Sandboxed environment: Code runs in secure, isolated sandboxes (often V8 isolates, WebAssembly runtimes, or lightweight containers) rather than full VMs.

- Stateless design: No persistent local state; any state must be stored in edge KV stores, distributed caches, or external databases.

- Ultra-low cold start times: Isolate- and WebAssembly-based edge runtimes typically start in single-digit milliseconds or less, which makes in‑path logic practical without user‑visible delays.

- Resource constraints: Tight CPU and memory limits encourage small, focused functions instead of monolithic services.

In practice, edge functions in CDNs enable logic such as:

- Custom routing and load balancing based on user, device, or feature flags.

- On‑the‑fly URL rewriting, redirects, and SEO‑friendly routing.

- Request normalization, header enrichment, and API gateway behavior.

- Personalization (language, currency, content variants) at read time.

- Token validation and auth pre‑checks before traffic reaches the origin.

Unlike traditional origin‑bound serverless, edge functions execute in many locations worldwide, often just a few network hops from the user. That proximity is what makes them transformative for content delivery.

If you mapped your current request flow end‑to‑end, how many network hops and middleware layers stand between your users and the logic deciding what they see?

Why Serverless at the Edge Is So Powerful

Here we connect edge functions to business outcomes: latency, reliability, and new capabilities that simply weren’t practical from centralized data centers.

1. Latency: Shaving Off the Last 100 Milliseconds

Latency compounds. You feel it not just in initial HTML delivery but in API calls, client‑side hydration, and even analytics beacons. Akamai’s retail research ties a 100 ms delay to a conversion drop of up to 7% in e‑commerce, and Google’s Milliseconds Make Millions study (conducted with Deloitte) found that improving mobile site speed by just 0.1 seconds lifted conversion rates across several verticals, including retail and travel.

Edge functions attack latency from multiple angles:

- Decision-making near the user: Personalization, routing, feature flags, and redirects execute at the edge, eliminating extra trips to origin.

- Smarter caching: Functions can normalize URLs, strip varying headers, and implement custom cache keys, dramatically improving hit ratios.

- Protocol and payload optimization: Alongside CDN-level support for HTTP/2 and HTTP/3, edge logic can adjust cache‑control headers and trim response sizes dynamically.

The net effect is faster Time to First Byte (TTFB) and more predictable performance, especially for globally distributed audiences hitting dynamic or semi‑dynamic endpoints.
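As an illustration of custom cache keys, here is a minimal, platform-neutral sketch in Python. Production edge runtimes usually run JavaScript, TypeScript, or WebAssembly, so treat this as a model of the logic rather than deployable code. The list of ignored tracking parameters and the `normalize_cache_key` name are assumptions for the example.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit

# Hypothetical set of query parameters that never change the response body.
IGNORED_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "gclid", "fbclid"}


def normalize_cache_key(url: str) -> str:
    """Map trivially different URLs for the same resource to one cache key."""
    parts = urlsplit(url)
    host = parts.hostname or ""  # urlsplit lowercases the hostname; any port is dropped
    path = parts.path or "/"
    if len(path) > 1 and path.endswith("/"):
        path = path[:-1]  # treat /shop/ and /shop as the same resource
    # Drop tracking parameters and sort the rest so parameter order can't fragment the cache.
    params = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in IGNORED_PARAMS
    )
    query = urlencode(params)
    # The scheme is left out, assuming the site is served over HTTPS only.
    return f"{host}{path}?{query}" if query else f"{host}{path}"
```

With this normalization, `https://Example.com/Shop/?b=2&utm_source=x&a=1` and `https://example.com/Shop?a=1&b=2` both map to `example.com/Shop?a=1&b=2`, so the second request can be served from the entry the first one created. Path case is deliberately preserved, because URL paths are case-sensitive.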

Where are you still making micro-decisions such as geo redirects, A/B test splits, or minor auth checks on the origin that could become near‑instant edge decisions?

2. Offloading Origins and Simplifying Backends

As traffic grows, origin architectures typically evolve into sprawling clusters: application servers, API gateways, rate limiters, edge routers, and multiple layers of caching. Many of these components exist only because they must sit as close as possible to users, but “close” used to mean “in our main region.”

With serverless at the edge, a meaningful slice of that logic can live directly in the CDN path:

- URL rewriting and canonicalization that used to happen in Nginx or Envoy.

- Feature gating and rollout logic that used to live in monoliths or API gateways.

- Simple transformations that demanded extra microservices (for example, header munging, JSON re‑shaping, A/B test bucketing).

This offload brings three concrete benefits:

- Reduced origin traffic: Fewer requests hit the origin, lowering compute, bandwidth, and database load.

- More resilient architecture: If the origin is degraded, the edge can still serve cached or fallback responses, sometimes even fully satisfying entire user journeys.

- Simpler backend topology: Fewer reverse proxies and layer‑7 middleboxes to maintain, patch, and scale.

Imagine your next capacity‑planning meeting: which services are you over‑provisioning today just to handle peak but trivial traffic that could be answered entirely at the CDN edge?

3. Personalization Without Paying the Latency Tax

One of the classic tensions in web architecture is between performance and personalization. The more tailored an experience is, the harder it is to cache. Traditionally, that led to expensive and slow origin‑driven pages.

Edge functions enable a hybrid model:

- Cache a mostly generic base document or API response.

- Apply lightweight, user‑specific logic at the edge (for example, currency, language, local inventory, A/B variant, logged‑in vs guest view).

For example, major streaming platforms use edge logic to enforce region‑based licensing, select nearby media servers, and choose appropriate bitrate ladders before the first chunk of video is streamed. Global e‑commerce companies localize currency and content, or gate promotions by market, directly in edge code, while still serving the bulk of HTML and assets from cache.

The result is personalization at CDN speed — a middle ground where you keep the benefits of caching while responding intelligently to who the user is and where they are.
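The hybrid model can be sketched as a small function that runs after the generic document comes out of cache. It picks currency and language from the visitor’s country (which many CDNs expose to edge code, in provider-specific ways) and the `Accept-Language` header. This is a platform-neutral Python sketch; the `MARKETS` table and the function names are hypothetical, and a real deployment would more likely load market data from edge configuration.

```python
from typing import Dict, List

# Hypothetical market table: currency plus supported languages (first entry = default).
MARKETS: Dict[str, Dict] = {
    "US": {"currency": "USD", "languages": ["en"]},
    "DE": {"currency": "EUR", "languages": ["de", "en"]},
    "CA": {"currency": "CAD", "languages": ["en", "fr"]},
}
DEFAULT_MARKET = {"currency": "USD", "languages": ["en"]}


def parse_accept_language(header: str) -> List[str]:
    """Return primary language subtags ordered by q-value, highest first."""
    weighted = []
    for position, item in enumerate(header.split(",")):
        tag, _, params = item.strip().partition(";")
        tag = tag.strip()
        if not tag or tag == "*":
            continue
        quality = 1.0
        params = params.strip()
        if params.startswith("q="):
            try:
                quality = float(params[2:])
            except ValueError:
                quality = 0.0
        if quality > 0:  # q=0 means "not acceptable"
            # Position breaks ties so equal q-values keep header order.
            weighted.append((-quality, position, tag.split("-")[0].lower()))
    return [language for _, _, language in sorted(weighted)]


def personalize(country: str, accept_language: str) -> Dict[str, str]:
    """Choose currency and language for an otherwise generic, cached page."""
    market = MARKETS.get(country.upper(), DEFAULT_MARKET)
    supported = market["languages"]
    preferred = parse_accept_language(accept_language)
    language = next((lang for lang in preferred if lang in supported), supported[0])
    return {"currency": market["currency"], "language": language}
```

Because this runs after the cache lookup, the base document stays shared across users. If the chosen language changes the HTML itself rather than a few injected values, the language (or market) would also need to become part of the cache key.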

If you could personalize three aspects of your experience without slowing pages down, which changes would bring the most revenue or engagement?

4. Security Controls Where They’re Most Effective

While full security stacks involve many layers, edge functions allow teams to implement custom security logic right where requests enter the system:

- Validating JWTs and access tokens before forwarding requests.

- Enforcing IP or region‑based access rules aligned with compliance requirements.

- Normalizing and sanitizing headers and query parameters.

- Rate‑limiting or shaping traffic based on business rules, not just raw IP reputation.

Because this logic runs extremely close to end users, bad traffic can be shaped or rejected early, saving origin resources for legitimate usage.
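As an example of a token pre-check, the sketch below validates an HS256-signed JWT (algorithm, signature, and expiry) using only Python’s standard library. It assumes a shared HMAC secret; many deployments instead use asymmetric algorithms such as RS256 or ES256 with published public keys, and real edge runtimes expose their own crypto APIs. Hand-rolled JWT handling like this is for illustration only; in production a vetted library is the safer choice. The `make_hs256_jwt` helper exists only to produce test tokens.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Any, Dict, Optional


def _b64url_encode(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def _b64url_decode(segment: str) -> bytes:
    # JWT segments are unpadded base64url; restore padding before decoding.
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def make_hs256_jwt(claims: Dict[str, Any], secret: bytes) -> str:
    """Test helper: sign claims as an HS256 JWT."""
    header = _b64url_encode(json.dumps({"alg": "HS256", "typ": "JWT"}).encode())
    payload = _b64url_encode(json.dumps(claims).encode())
    signature = hmac.new(secret, f"{header}.{payload}".encode(), hashlib.sha256).digest()
    return f"{header}.{payload}.{_b64url_encode(signature)}"


def validate_hs256_jwt(token: str, secret: bytes, now: Optional[float] = None) -> Optional[Dict[str, Any]]:
    """Return the claims of a valid, unexpired HS256 JWT, or None to reject the request."""
    try:
        header_b64, payload_b64, signature_b64 = token.split(".")
        header = json.loads(_b64url_decode(header_b64))
        if header.get("alg") != "HS256":
            return None  # reject "none" and any algorithm we did not expect
        signing_input = f"{header_b64}.{payload_b64}".encode()
        expected = hmac.new(secret, signing_input, hashlib.sha256).digest()
        # Constant-time comparison avoids leaking how many signature bytes matched.
        if not hmac.compare_digest(expected, _b64url_decode(signature_b64)):
            return None
        claims = json.loads(_b64url_decode(payload_b64))
        exp = claims.get("exp")
    except (ValueError, TypeError, AttributeError):
        return None  # malformed token: wrong segment count, bad base64, or bad JSON
    current = time.time() if now is None else now
    if not isinstance(exp, (int, float)) or exp <= current:
        return None  # missing or past expiry
    return claims
```

An edge function could call this on the bearer token from the `Authorization` header and answer 401 itself when it returns `None`, so requests with missing, forged, or expired tokens never reach the origin.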

What security or compliance checks in your stack are effectively boilerplate, repeated across services, and would be cleaner as reusable edge logic instead?

Comparing Architectures: Origin, CDN, and Edge Functions

This section gives you a side‑by‑side comparison so stakeholders can visualize trade‑offs when deciding how far to push logic to the edge.

| Architecture | Where Logic Runs | Latency | Operational Complexity | Typical Use Cases |
|---|---|---|---|---|
| Origin‑centric (no CDN) | Central data centers / cloud regions | Highest, especially for global users | High (you manage everything) | Internal apps, low‑traffic sites, legacy systems |
| Traditional CDN (static caching) | Origins + CDN cache for static assets | Improved for static content; dynamic still origin‑bound | Moderate (cache rules, invalidations) | Media delivery, simple websites, asset offload |
| CDN with Edge Functions | Origins + programmable CDN edge | Lowest for both static and many dynamic decisions | Higher design complexity, lower infra overhead | Global apps, APIs, streaming, dynamic personalization |

Seen through this lens, edge functions are not a replacement for origins but a powerful layer that changes what your origins need to do. They become systems of record and heavy compute engines, while the CDN becomes a programmable delivery and decision fabric around them.

Looking at your roadmap, are you still treating your CDN as a dumb cache, or are you designing features with the assumption that the edge is a first‑class compute tier?

Real-World Edge Function Patterns That Deliver Value

Now we move from theory to patterns you can adopt, many inspired by how large platforms use edge compute to keep experiences fast and resilient.

1. Smart Routing, Geo Control, and Traffic Steering

Modern CDNs use edge functions to decide, with minimal added latency, where each request should go:

- Geo routing: Send users to the nearest healthy origin region or data center for dynamic workloads.

- Canary and blue/green deployments: Route a percentage of traffic to a new backend version without changing DNS or client code.

- Failover logic: Detect unhealthy origins and seamlessly reroute to backup regions, serving stale cached content (for example, via stale-if-error) where appropriate.

Large SaaS and streaming platforms rely on this pattern to run continuous deployments and regional migrations with minimal user impact. Instead of baking routing logic into application servers or Kubernetes ingress layers, they move it into the edge so it’s consistent across all clients and channels.
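Geo routing with failover can be sketched as an ordered preference list per visitor region, filtered by current origin health. This is a platform-neutral Python sketch; the region names, the continent-based table, and the source of health data (assumed here to come from the CDN’s health checks or an edge key-value store) are all hypothetical.

```python
from typing import Dict, List, Optional

# Hypothetical routing table: origin regions in order of preference per visitor continent.
PREFERENCES: Dict[str, List[str]] = {
    "EU": ["eu-west", "us-east", "ap-south"],
    "NA": ["us-east", "eu-west", "ap-south"],
    "AS": ["ap-south", "eu-west", "us-east"],
}
DEFAULT_ORDER = ["us-east", "eu-west", "ap-south"]


def choose_origin(continent: str, health: Dict[str, bool]) -> Optional[str]:
    """Return the first healthy origin for this visitor, or None if all are down."""
    for region in PREFERENCES.get(continent, DEFAULT_ORDER):
        if health.get(region, False):  # unknown health is treated as unhealthy
            return region
    # None tells the caller to serve stale cached content or a fallback page.
    return None
```

A European visitor normally lands on `eu-west`; if that region’s health flag turns false, the same call returns `us-east`, with no DNS change or client update involved.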

If you could promote or roll back a new backend version just by flipping an edge routing rule, how much release risk and downtime could you remove from your deployment pipeline?

2. A/B Testing and Feature Flags Without Extra Round Trips

A/B tests often introduce subtle performance regressions: client‑side JavaScript waits for a decision, or servers call out to third‑party flag providers. Edge functions can perform experiment assignment and flag evaluation as part of the request lifecycle:

- Assign users to variants based on cookies, IDs, or geographic segments.

- Serve variant‑specific cached versions of pages or assets.

- Emit experiment metadata as headers for analytics or logging systems.

Major experimentation platforms increasingly expose edge SDKs for this reason: the decision belongs where it can be made fastest, not buried in a monolith or client bundle.
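One common way to assign experiment variants at the edge without a network call is stable hashing: hash the experiment name together with a user identifier, then map the result onto weighted buckets. The Python sketch below is platform-neutral; the experiment name, variant weights, header names, and the sticky variant cookie are illustrative assumptions.

```python
import hashlib
from typing import Dict, Optional

EXPERIMENT = "checkout-redesign"  # hypothetical experiment name
VARIANTS = [("control", 50), ("new_checkout", 50)]  # weights in percent, summing to 100


def bucket(user_id: str, experiment: str) -> int:
    """Map a user to a stable bucket in [0, 100) for one experiment."""
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode()).digest()
    return int.from_bytes(digest[:8], "big") % 100


def assign_variant(user_id: str, cookie_variant: Optional[str] = None) -> str:
    """Honor an existing variant cookie, otherwise assign deterministically."""
    if cookie_variant in {name for name, _ in VARIANTS}:
        return cookie_variant
    point = bucket(user_id, EXPERIMENT)
    cumulative = 0
    for name, weight in VARIANTS:
        cumulative += weight
        if point < cumulative:
            return name
    return VARIANTS[0][0]  # only reached if weights sum to less than 100


def experiment_headers(variant: str) -> Dict[str, str]:
    """Metadata for analytics and for a variant-specific cache key."""
    return {"x-experiment": EXPERIMENT, "x-variant": variant}
```

Because the hash includes the experiment name, one user can land in different buckets across experiments, which keeps experiments independent of each other. Writing the result back as a cookie keeps assignments sticky even if the weights change later.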

How many of your “just one more experiment” decisions currently add script weight or backend hops that edge‑side flag evaluation could remove?

3. API Aggregation and Response Shaping

As microservice architectures proliferate, frontends often need to call multiple APIs to assemble a single page or app view. Without care, this leads to chatty networks and cascading latency.

Edge functions can act as a lightweight API gateway:

- Fan out to multiple backend services in parallel.

- Aggregate and normalize responses into a single JSON payload tailored for the client.

- Cache composed responses with fine‑grained keys (for example, user segment + language + feature flags).

This pattern keeps frontends simple and mobile clients lean, while centralizing cross‑cutting concerns in a place that is globally close to users.
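The fan-out-and-compose pattern can be sketched with `asyncio.gather`, running backend calls concurrently and giving each one a timeout with a fallback so a single slow service cannot stall the whole response. In this platform-neutral Python sketch, the three backend functions are hypothetical stand-ins that simulate latency with `asyncio.sleep`; in an edge runtime they would be HTTP subrequests.

```python
import asyncio
from typing import Any, Awaitable, Dict, List


# Hypothetical backends; the sleeps simulate network latency.
async def fetch_navigation(language: str) -> Dict[str, Any]:
    await asyncio.sleep(0.01)
    return {"language": language, "links": ["home", "deals"]}


async def fetch_promotions(segment: str) -> List[Dict[str, str]]:
    await asyncio.sleep(0.01)
    return [{"segment": segment, "code": "WELCOME10"}]


async def fetch_recommendations(segment: str) -> List[str]:
    await asyncio.sleep(0.5)  # simulated slow service
    return ["sku-1", "sku-2"]


async def with_fallback(call: Awaitable[Any], fallback: Any, timeout: float) -> Any:
    """Await a backend call, returning a fallback on timeout or error."""
    try:
        return await asyncio.wait_for(call, timeout)
    except Exception:
        return fallback


async def compose_home(segment: str, language: str, timeout: float = 0.1) -> Dict[str, Any]:
    """Fan out in parallel and merge the results into one client-shaped payload."""
    navigation, promotions, recommendations = await asyncio.gather(
        with_fallback(fetch_navigation(language), {"language": language, "links": []}, timeout),
        with_fallback(fetch_promotions(segment), [], timeout),
        with_fallback(fetch_recommendations(segment), [], timeout),
    )
    return {"navigation": navigation, "promotions": promotions, "recommendations": recommendations}


def composed_cache_key(segment: str, language: str, flags: Dict[str, bool]) -> str:
    """Cache key for the composed response: segment + language + enabled flags."""
    enabled = ",".join(sorted(name for name, on in flags.items() if on))
    return f"home:{segment}:{language}:{enabled}"
```

Here `asyncio.run(compose_home("vip", "en"))` returns navigation and promotions, but the simulated recommendations service exceeds its 100 ms budget, so the page ships with an empty recommendations list instead of waiting half a second.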

What percentage of your frontend latency is actually due to multiple API calls that could be merged or cached through an edge aggregation layer?

4. SEO, Redirects, and URL Normalization at Scale

SEO teams often need fine‑grained control over redirects, canonical URLs, and header strategies. Implementing these rules directly in application code leads to brittle deployments and complex release coordination.

With edge functions, teams can:

- Implement 301/302 redirects based on pattern matching rather than app logic.

- Normalize legacy URL structures and preserve link equity without touching the origin.

- Adjust cache‑control, hreflang, and other SEO‑critical headers dynamically.

Because changes deploy at the CDN layer, typically much faster than a full application release, SEO and growth teams can iterate quickly and respond to market changes without waiting on backend release cycles.
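Pattern-based redirects can be sketched as an ordered list of regular-expression rules where the first match wins. The Python sketch below is platform-neutral; the rules themselves are hypothetical examples covering a legacy blog structure, old product URLs, and a temporary campaign redirect.

```python
import re
from typing import Optional, Tuple

# Hypothetical rules: (pattern, replacement template, HTTP status). First match wins.
REDIRECT_RULES = [
    (re.compile(r"^/blog/\d{4}/\d{2}/([\w-]+)/?$"), r"/articles/\1", 301),
    (re.compile(r"^/products/([\w-]+)\.html$"), r"/p/\1", 301),
    (re.compile(r"^/sale/?$"), "/deals", 302),  # temporary campaign redirect
]


def match_redirect(path: str, query: str = "") -> Optional[Tuple[int, str]]:
    """Return (status, Location) for the first matching rule, or None to pass through."""
    for pattern, template, status in REDIRECT_RULES:
        match = pattern.match(path)
        if match:
            location = match.expand(template)
            # Carry the query string over so campaign parameters survive the redirect.
            return status, (f"{location}?{query}" if query else location)
    return None
```

For example, `/blog/2021/05/edge-caching/` becomes a 301 to `/articles/edge-caching`, which marks the move as permanent, while `/sale` gets a 302 so the original URL stays canonical once the campaign ends.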

How many redirect or URL mapping rules in your backlog could move out of application code and into a centrally managed edge ruleset?

5. Streaming and Real-Time Experiences

Video platforms, live sports broadcasters, gaming companies, and real‑time collaboration tools care deeply about latency, jitter, and packet loss. Edge compute plays a growing role here as well:

- Selecting optimal streaming origins and transcoders based on instantaneous health and capacity.

- Adjusting manifests and playlists (HLS/DASH) at the edge for device capabilities or network conditions.

- Enforcing concurrency limits and access controls for premium live events.

Real‑time analytics and QoE (Quality of Experience) metrics can also be ingested at the edge, sampled, enriched, and forwarded in aggregated form to central systems, reducing bandwidth and processing costs.
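Manifest rewriting can be illustrated with an HLS master playlist: the sketch below drops variant streams whose declared `BANDWIDTH` exceeds a cap, which an edge function might derive from device class or network hints (where the cap comes from is an assumption here). It is a platform-neutral Python sketch that only handles `#EXT-X-STREAM-INF` entries, and it keeps the lowest variant if the cap would otherwise remove everything.

```python
import re
from typing import Optional

SAMPLE_MASTER = """#EXTM3U
#EXT-X-STREAM-INF:BANDWIDTH=800000,RESOLUTION=640x360
low.m3u8
#EXT-X-STREAM-INF:BANDWIDTH=2500000,RESOLUTION=1280x720
mid.m3u8
#EXT-X-STREAM-INF:BANDWIDTH=6000000,RESOLUTION=1920x1080
high.m3u8
"""

# Match BANDWIDTH= at the start of the attribute list or right after a comma,
# so AVERAGE-BANDWIDTH is not mistaken for it.
_BANDWIDTH = re.compile(r"(?:^|,)BANDWIDTH=(\d+)")


def _bandwidth(stream_inf: str) -> Optional[int]:
    match = _BANDWIDTH.search(stream_inf.split(":", 1)[1])
    return int(match.group(1)) if match else None


def filter_master_playlist(playlist: str, max_bandwidth: int) -> str:
    """Keep only variants at or below max_bandwidth (bits per second)."""
    lines = playlist.strip().splitlines()
    kept, variants = [], []
    i = 0
    while i < len(lines):
        line = lines[i]
        if line.startswith("#EXT-X-STREAM-INF:") and i + 1 < len(lines):
            # Each variant is a tag line followed by its URI line.
            variants.append((_bandwidth(line), line, lines[i + 1]))
            i += 2
        else:
            # Non-variant tags (e.g. #EXTM3U) are kept and emitted before the variants.
            kept.append(line)
            i += 1
    allowed = [v for v in variants if v[0] is not None and v[0] <= max_bandwidth]
    if not allowed and variants:
        # Never return an empty ladder: fall back to the lowest declared bandwidth.
        allowed = [min(variants, key=lambda v: v[0] if v[0] is not None else float("inf"))]
    for _, tag, uri in allowed:
        kept.extend([tag, uri])
    return "\n".join(kept) + "\n"
```

With a 3 Mbit/s cap, `filter_master_playlist(SAMPLE_MASTER, 3_000_000)` keeps the 360p and 720p variants and drops the 1080p one, so the player never tries a rendition the device class is not expected to sustain.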

If your next big event drew 10× normal traffic, would your current origin‑centric architecture survive, or would you rather have edge logic absorbing and shaping that surge first?

Design Principles for Successful Edge Function Architectures

Edge compute has its own constraints. This section covers the patterns that let you benefit from the edge without re‑creating monoliths in a new place.

1. Keep Functions Small, Composable, and Stateless

Edge environments favor small, focused units of logic. Resist the temptation to port entire services to the edge. Instead:

- Write single‑purpose functions (for example, geo‑route request, evaluate feature flags, normalize URL).

- Compose them through middleware pipelines or routing rules.

- Avoid in‑function global state beyond simple caches or configuration.

This improves reliability, makes rollbacks safer, and keeps costs predictable.
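Composition can be sketched as a list of single-purpose steps, each of which either passes a (possibly modified) request along or answers early with a response. The `Request` and `Response` classes below are minimal stand-ins for illustration, not any provider’s API, and the individual steps and the `x-edge-pipeline` header are hypothetical.

```python
import re
from dataclasses import dataclass, field, replace
from typing import Callable, Dict, List, Union


@dataclass(frozen=True)
class Request:
    path: str
    headers: Dict[str, str] = field(default_factory=dict)


@dataclass(frozen=True)
class Response:
    status: int
    body: str = ""


Step = Callable[[Request], Union[Request, Response]]


def collapse_slashes(req: Request) -> Request:
    """Normalize //shop//items to /shop/items."""
    return replace(req, path=re.sub(r"/{2,}", "/", req.path))


def require_auth_for_account(req: Request) -> Union[Request, Response]:
    """Reject unauthenticated account requests before they reach the origin."""
    if req.path.startswith("/account") and "authorization" not in req.headers:
        return Response(401, "authentication required")
    return req


def tag_request(req: Request) -> Request:
    """Add a header so the origin can tell which pipeline version handled the request."""
    return replace(req, headers={**req.headers, "x-edge-pipeline": "v1"})


def run_pipeline(req: Request, steps: List[Step]) -> Union[Request, Response]:
    for step in steps:
        result = step(req)
        if isinstance(result, Response):
            return result  # short-circuit: later steps and the origin are skipped
        req = result
    return req  # forward to cache or origin


PIPELINE: List[Step] = [collapse_slashes, require_auth_for_account, tag_request]
```

Ordering is part of the design: because slash normalization runs before the auth check, a request for `//account` is still caught by it. Each step can be tested, toggled, or rolled back on its own.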

2. Design for Data Locality and Caching

Because edge functions often run far from your primary databases, they work best with:

- Edge‑friendly data sources such as key‑value stores, configuration services, and precomputed datasets.

- Cached views or materialized projections suitable for read‑heavy workloads.

- Event‑driven pipelines that keep this data fresh with minimal write‑time overhead.

Many teams adopt a “configuration at the edge, critical data at the origin” split, where only hard personalization (for example, account balances) requires origin access, while soft personalization (for example, content variants) uses edge‑resident data.
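One way to apply that split is to send a small set of paths straight to the origin and read soft data through a short-lived in-memory cache, so most requests skip even the key-value lookup. The Python sketch below is platform-neutral: the loader stands in for a hypothetical edge KV read, and the path prefixes that mark hard personalization are illustrative assumptions.

```python
import time
from typing import Any, Callable, Dict, Tuple

# Hypothetical prefixes whose data must always come from the origin (hard personalization).
ORIGIN_ONLY_PREFIXES = ("/api/account", "/api/balance")


def needs_origin(path: str) -> bool:
    return path.startswith(ORIGIN_ONLY_PREFIXES)


class TTLCache:
    """Per-instance cache for soft data such as banners, flags, or market config."""

    def __init__(self, loader: Callable[[str], Any], ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._loader = loader  # e.g. a read from an edge key-value store
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries: Dict[str, Tuple[float, Any]] = {}

    def get(self, key: str) -> Any:
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]  # still fresh: no lookup needed
        value = self._loader(key)
        self._entries[key] = (now + self._ttl, value)
        return value
```

Because edge instances can be recycled at any moment, this cache is purely an optimization: a cold instance simply calls the loader again, so correctness never depends on the cached copy surviving.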

3. Observe, Log, and Debug from Day One

Because edge logic is distributed and co‑located with your CDN, debugging production issues can be non‑obvious. Invest early in:

- Structured logging with request IDs shared between edge and origin.

- Per‑region metrics on error rates, latency, and cold starts.

- Replay tooling or shadow environments where you can reproduce edge behavior safely.

Treat edge observability as first‑class; without it, the benefits of distribution can quickly turn into confusion during incidents.
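Request-ID propagation and structured logging take only a few lines: reuse an incoming ID or mint one, forward it to the origin, and emit one JSON record per request. This platform-neutral Python sketch assumes that edge and origin agree on an `x-request-id` header and that header names arrive lowercased; the log field names are illustrative.

```python
import json
import time
import uuid
from typing import Dict

REQUEST_ID_HEADER = "x-request-id"  # assumption: shared convention between edge and origin


def ensure_request_id(headers: Dict[str, str]) -> Dict[str, str]:
    """Return headers to forward upstream, guaranteed to carry a request ID."""
    forwarded = dict(headers)
    if not forwarded.get(REQUEST_ID_HEADER):
        forwarded[REQUEST_ID_HEADER] = uuid.uuid4().hex
    return forwarded


def log_line(request_id: str, edge_region: str, path: str, status: int,
             duration_ms: float, cache_status: str) -> str:
    """One structured record per request; origin logs carry the same request_id."""
    return json.dumps({
        "ts": time.time(),
        "request_id": request_id,
        "edge_region": edge_region,
        "path": path,
        "status": status,
        "duration_ms": duration_ms,
        "cache_status": cache_status,
    })
```

Grouping these records by `edge_region` yields per-region error and latency views, and joining on `request_id` connects an edge decision to the origin log lines it caused.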

4. Control Blast Radius with Traffic Segmentation

Just as with backend services, rollouts should be gradual:

- Start with shadow traffic or a small percentage of real users.

- Segment by geography, device type, or customer tier for safer experiments.

- Maintain clear and simple rollback paths (for example, toggle a flag to bypass a function).

Edge functions operate at incredible scale — a small bug can impact millions of requests per minute. Guardrails and progressive delivery are non‑negotiable.
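A rollout gate that combines a kill switch, a geographic segment, and a traffic percentage might look like the Python sketch below. It is platform-neutral; `RolloutConfig` is hypothetical and would presumably be read from edge configuration at request time, so that rolling back becomes a config change rather than a redeploy.

```python
import hashlib
from dataclasses import dataclass
from typing import FrozenSet


@dataclass(frozen=True)
class RolloutConfig:
    enabled: bool = True                   # kill switch: False bypasses the new function
    percent: float = 1.0                   # share of eligible traffic, 0 to 100
    regions: FrozenSet[str] = frozenset()  # empty means every region is eligible


def in_rollout(cfg: RolloutConfig, user_key: str, region: str) -> bool:
    """Decide whether this request should run the new edge logic."""
    if not cfg.enabled:
        return False
    if cfg.regions and region not in cfg.regions:
        return False
    # Stable hash so the same user stays in (or out of) the rollout between requests.
    digest = hashlib.sha256(user_key.encode()).digest()
    bucket = int.from_bytes(digest[:8], "big") % 10_000 / 100  # 0.00 to 99.99
    return bucket < cfg.percent
```

Starting with `RolloutConfig(percent=1, regions=frozenset({"EU"}))` limits exposure to roughly 1% of European users, and flipping `enabled` to `False` takes everyone off the new path at once.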

Looking at these principles, which ones would require the biggest mindset shift for your current development and operations teams?

Cost, Scale, and the Enterprise Case for Edge Functions

Here we align edge functions with finance and infrastructure strategy: why they can lower total cost of ownership while increasing resilience.

Enterprise leaders often ask a simple question: does moving logic to the edge make things more expensive or less? The honest answer is: it depends on how you design and what you measure — but when implemented thoughtfully, edge functions tend to reduce overall costs.

1. Reducing Origin and Bandwidth Costs

Every request served or shaped at the edge is one that your origin doesn’t have to handle in full. Typical savings come from:

- Higher cache hit ratios: Better normalization and cache key strategies mean fewer cache misses.

- Lower origin compute usage: Simple tasks (redirects, header logic, basic personalization) disappear from origin workloads.

- Optimized payloads: Edge functions can compress, minify, or selectively trim responses, reducing total egress.

For high‑traffic streaming, gaming, and SaaS platforms, even single‑digit percentage improvements in origin offload translate into significant savings on cloud bills.
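The arithmetic behind origin offload is worth making explicit: origin traffic scales with the cache miss ratio, not the hit ratio, so a few points of hit-ratio improvement near the top of the range remove a disproportionate share of origin load. The request volume below is a hypothetical round number.

```python
def origin_requests(total_requests: int, hit_ratio_percent: int) -> int:
    """Requests that miss the edge cache and reach the origin."""
    return total_requests * (100 - hit_ratio_percent) // 100


def origin_reduction_percent(hit_before: int, hit_after: int) -> float:
    """Relative drop in origin traffic when the hit ratio improves."""
    miss_before, miss_after = 100 - hit_before, 100 - hit_after
    return (miss_before - miss_after) * 100 / miss_before


print(origin_requests(1_000_000, 90))    # 100000
print(origin_requests(1_000_000, 95))    # 50000
print(origin_reduction_percent(90, 95))  # 50.0
print(origin_reduction_percent(50, 55))  # 10.0
```

Going from a 90% to a 95% hit ratio halves origin requests, while the same five-point gain from 50% to 55% trims them by only 10%.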

2. Avoiding Over-Provisioning for Peaks

Traditional architectures require origin capacity to handle peak plus margin — including flash sales, product launches, and major live events. This often means running expensive infrastructure underutilized for most of the year.

With serverless at the edge, much of the burst can be absorbed in a highly elastic layer designed precisely for spiky workloads. Because many edge platforms bill per request and compute unit, you pay directly in proportion to actual usage rather than for idle capacity.

3. Why BlazingCDN Fits Enterprise Edge Strategies

Modern enterprises increasingly look for CDN partners that combine high performance with predictable, transparent pricing. BlazingCDN was built with exactly this in mind: a high‑throughput delivery platform that offers stability and fault tolerance comparable to Amazon CloudFront, but with a dramatically more cost‑effective pricing model.

For organizations delivering large volumes of media, software, or API traffic, BlazingCDN’s starting cost of just $4 per TB ($0.004 per GB) can reduce delivery spend by tens or hundreds of thousands of dollars annually, without compromising on uptime — backed by a 100% uptime track record. This makes it especially attractive for enterprises and corporate clients that need both reliability and aggressive cost optimization.

Companies in sectors like video streaming, online gaming, software distribution, and SaaS can use BlazingCDN to scale quickly for global product launches or seasonal spikes while keeping infrastructure lean and configuration flexible. It’s already recognized as a forward‑thinking choice for organizations that refuse to trade performance for efficiency.

As you evaluate providers, it’s worth exploring how BlazingCDN’s rules engine, flexible delivery options, and other enterprise-ready CDN features can underpin your own edge‑first roadmap.

If finance asked you tomorrow how you plan to cut delivery and origin costs next year, could you show a clear path that includes programmable edge offload as a major lever?

How to Get Started with Edge Functions in Your CDN

This section turns concepts into a practical rollout plan: where to start, how to limit risk, and how to demonstrate quick wins.

Step 1: Audit Your Request Lifecycle

Start by mapping your typical user request from browser or device to response:

- Which services does it pass through (CDN, load balancers, gateways, microservices)?

- Where are simple, repetitive decisions made (redirects, header rewrites, auth checks)?

- Where do you see the most latency and failure risk?

Mark every place where the origin is used primarily for lightweight logic rather than heavy business rules or data access. These are prime candidates for edge migration.

Step 2: Pick Low-Risk, High-Impact Use Cases

Common starter projects include:

- Consolidating complex redirect and rewrite rules into edge functions.

- Implementing geo‑based content or language selection.

- Adding or normalizing security headers consistently across properties.

- Performing simple A/B test or feature flag evaluations.

These changes are easy to validate, often reversible with a single configuration switch, and can deliver visible performance or operational wins within days or weeks.
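Security-header normalization is a typical first project. The platform-neutral Python sketch below adds a baseline set of headers to every response, lets values the origin already set take precedence, and strips a header that advertises backend technology. The header values are illustrative and should be tuned per property; for example, HSTS with `includeSubDomains` should only be enabled once every subdomain is HTTPS-ready.

```python
from typing import Dict

# Illustrative baseline; tune per property before rollout.
SECURITY_HEADERS = {
    "strict-transport-security": "max-age=31536000; includeSubDomains",
    "x-content-type-options": "nosniff",
    "referrer-policy": "strict-origin-when-cross-origin",
    "x-frame-options": "SAMEORIGIN",
}


def apply_security_headers(headers: Dict[str, str], override: bool = False) -> Dict[str, str]:
    """Return response headers with the baseline added.

    Header names are compared case-insensitively by lowercasing them. Values the
    origin already set win unless override=True.
    """
    result = {name.lower(): value for name, value in headers.items()}
    for name, value in SECURITY_HEADERS.items():
        if override or name not in result:
            result[name] = value
    result.pop("x-powered-by", None)  # avoid advertising the backend stack
    return result
```

Letting origin values win by default means a team that deliberately set `X-Frame-Options: DENY` keeps it, while properties that set nothing still get a consistent baseline.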

Step 3: Establish Guardrails, Observability, and SLIs

Before rolling out edge logic across all traffic:

- Define key SLIs (latency, error rate, cache hit ratio) and how you’ll monitor them.

- Set rollout strategies (for example, 1% traffic, then 10%, then 50%+).

- Implement robust logging and tracing that lets on‑call engineers quickly see when edge behavior diverges from expectations.

This foundation turns edge functions from an experiment into a dependable part of your production architecture.
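The staged rollout and the SLIs can be tied together in a small promotion rule: advance to the next traffic stage only while error rate, p95 latency, and cache hit ratio stay within targets, and drop to 0% otherwise. This Python sketch is platform-neutral; the stages follow the 1%, 10%, 50%+ example, and the threshold values are placeholders, not recommendations.

```python
from dataclasses import dataclass
from typing import List

STAGES: List[int] = [1, 10, 50, 100]  # percent of traffic


@dataclass
class SLIWindow:
    requests: int
    errors: int
    p95_ms: float
    cache_hits: int


@dataclass
class SLOTargets:
    max_error_rate: float = 0.001  # placeholder targets
    max_p95_ms: float = 200.0
    min_hit_ratio: float = 0.85


def next_stage(current: int, window: SLIWindow, targets: SLOTargets) -> int:
    """Advance one stage when SLIs are healthy; roll back to 0% when they are not."""
    if window.requests == 0:
        return current  # no data yet: hold the current stage
    error_rate = window.errors / window.requests
    hit_ratio = window.cache_hits / window.requests
    healthy = (
        error_rate <= targets.max_error_rate
        and window.p95_ms <= targets.max_p95_ms
        and hit_ratio >= targets.min_hit_ratio
    )
    if not healthy:
        return 0
    later = [stage for stage in STAGES if stage > current]
    return later[0] if later else current
```

Rolling straight back to 0% is a deliberately conservative choice; a team might instead step back one stage and page the on-call engineer.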

Step 4: Integrate Edge Functions into Your SDLC

Edge logic should be treated like any other code:

- Versioned in source control alongside application and infrastructure code.

- Tested with unit, integration, and canary tests.

- Reviewed via standard code review processes.

- Deployed using CI/CD pipelines with clear rollback steps.

The more your teams see edge functions as a first‑class environment rather than an ops‑only configuration layer, the more value you’ll extract.

Step 5: Expand to Strategic Workloads

Once simple patterns are validated, you can move on to higher‑value scenarios:

- API aggregation for critical user journeys.

- Dynamic routing and failover for multi‑region origins.

- Selective static generation and caching strategies for headless and Jamstack architectures.

These deeper integrations are where organizations often see the biggest impact on both performance and cost.

If you picked one small edge function project to deliver in the next 30 days, which use case would both excite your team and convince leadership that edge compute deserves more investment?

Looking Ahead: Edge-Native Experiences and Your Next Move

We close by looking forward — and inviting you to take concrete next steps instead of treating edge functions as a future‑only topic.

Analysts like Gartner have forecast that by 2025, a majority of enterprise‑generated data will be created and processed outside traditional data centers or clouds. That shift isn’t just about IoT sensors or industrial systems; it’s about how every high‑traffic digital experience is architected. Edge‑native patterns — where CDNs, edge functions, and origins form a tightly integrated continuum — are rapidly becoming the default for companies that depend on digital performance as a core competitive advantage.

Whether you’re streaming content to millions, shipping frequent software updates to global users, or running a SaaS platform with strict SLAs, programmable edge capabilities are no longer a nice-to-have. They’re a way to reduce risk, shrink bills, and unlock features that were previously too slow or complex to ship.

The next move is yours. Map one key user journey, identify the slow or fragile parts, and ask: what if this decision happened at the edge instead? Then start small, ship an edge function that removes a real bottleneck, and measure the impact. Share those numbers with your team — and with leadership.

If you’d like to explore what an edge‑forward CDN strategy could look like for your organization, bring your toughest performance or scaling challenge and start a conversation with your infrastructure, product, and finance stakeholders. Then, compare how different providers address those needs — and how a modern, cost‑efficient platform like BlazingCDN can become the foundation for the next generation of your content delivery stack.

When you’re ready, share this article with your team, bookmark it for your next architecture review, and start drafting your first edge function use case — because the edge isn’t just where content is delivered anymore; it’s where your competitive advantage will increasingly be decided.