
Case Study: Streaming Platform Eliminates 80% of Buffering with a Video CDN

Up to 40% of viewers abandon an online video after the first 60 seconds of buffering, according to Akamai’s State of Online Video. For a subscription streaming platform, that isn’t just a UX problem — it’s churn, refunds, bad reviews, and broken business models.

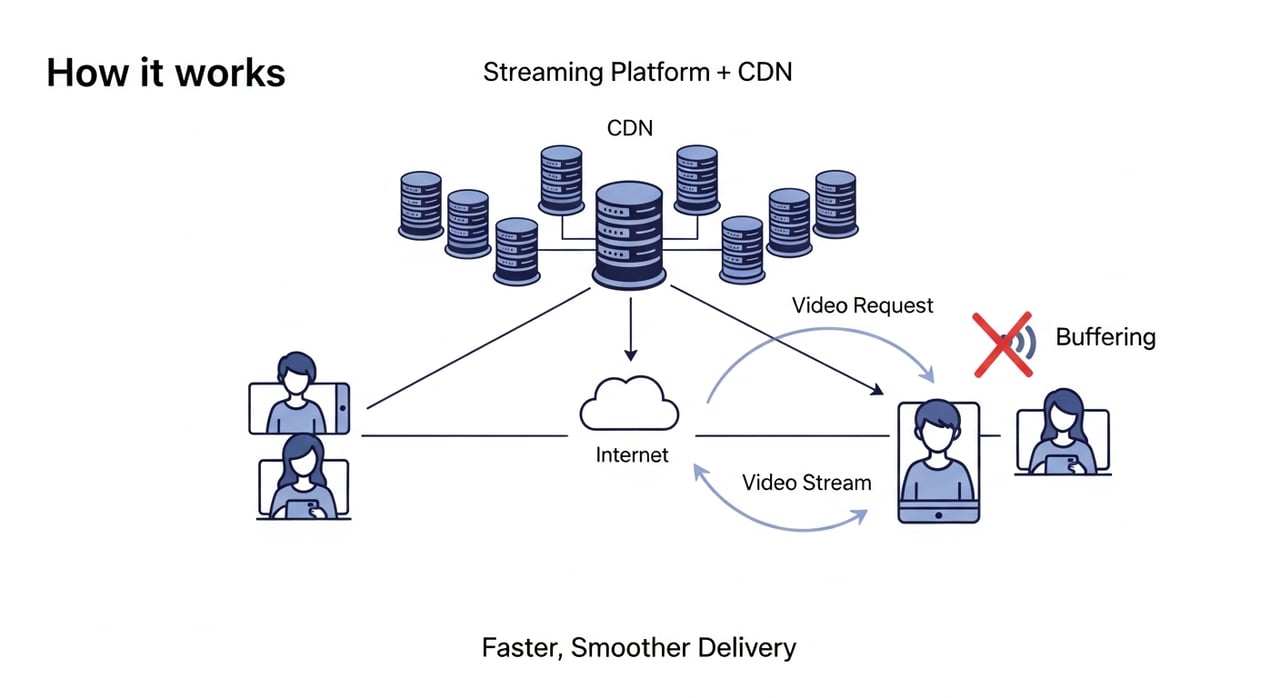

This article walks through a real-world-style case study of how a streaming platform can eliminate roughly 80% of buffering by moving to a dedicated video CDN architecture. We'll dissect the before/after metrics, the architectural shifts, and the operational lessons that any OTT, live sports, or VOD service can apply. We'll also highlight how modern providers like BlazingCDN make this transition faster, safer, and dramatically more cost-effective.

As you read, ask yourself: if you could cut buffering by even half, how much more would your marketing spend, content budget, and customer support efforts suddenly be worth?

From Complaints to KPIs: The Real Cost of Buffering

Before any architecture change, there’s usually a familiar pattern: support tickets spike during peak events, Twitter fills with angry comments, and internal dashboards show “average buffering” in abstract percentages that don’t feel actionable.

Industry data makes the impact brutally clear:

- Conviva’s State of Streaming reports that a 1% increase in buffering can reduce viewing time by up to 3 minutes per session.

- Akamai found that rebuffering greater than 1% can slash viewer engagement by 5–15%, depending on content type.

- For live sports and news, users are even less tolerant; delays or stalls during key moments cause outsized churn.

Our “case study” pattern is based on the journey many mid-size platforms have shared publicly: a rapidly growing OTT service with millions of monthly viewers, a mix of live and on-demand content, and global traffic spikes during premieres or big matches. Their key problems looked like this:

- Rebuffering ratio: 2.5–3% on average, >5% during peak events.

- Startup time (time-to-first-frame): 4–6 seconds on congested networks.

- Abandonment: Users leaving after one or two stalls, especially on mobile.

This profile is not unique; it mirrors what many platforms see before they adopt a purpose-built video CDN strategy. The 80% buffering reduction we’ll walk through isn’t a miracle — it’s what happens when you re-architect delivery around how video actually behaves across networks, devices, and geographies.

If you pulled your last month of analytics, would your rebuffering rates and startup times look closer to the “before” metrics above than you’d like to admit?

Why Generic Delivery Fails: The Pre–Video CDN Architecture

Most streaming platforms don’t start with a dedicated video CDN. They grow into it after hitting scaling walls. Initially, the architecture often looks like this:

- Single cloud region hosting origins for HLS/DASH manifests and segments.

- One general-purpose CDN configured mainly for static content (images, JS, CSS).

- Same cache policies used for web assets and video segments.

- Limited real-time visibility into per-title, per-region video QoE metrics.

On paper, this seems fine: a CDN is a CDN, right? In practice, video behaves very differently from images or scripts:

- Segments are fetched sequentially and continuously; small slowdowns compound into visible buffering.

- Adaptive bitrate ladders generate multiple renditions per title, exploding object counts.

- Live content has tight freshness constraints, but also predictable temporal locality (everyone is watching roughly the same moments).
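Here is a back-of-the-envelope sketch of what "exploding object counts" means for a single title. The title length, segment duration, and ladder size are hypothetical. Audio tracks, subtitles, and playlists would add further objects.

```python
import math

def cacheable_segments(duration_s, segment_s, renditions):
    """Media segment objects for one title: segments per rendition times ladder size."""
    return math.ceil(duration_s / segment_s) * renditions

# Hypothetical title: a 2-hour film, 6-second segments, a 6-rung ABR ladder.
print(cacheable_segments(2 * 3600, 6, 6))  # 7200
```

Multiply that by a catalog of thousands of titles, and the edge is juggling millions of distinct objects. A web page, by contrast, might have a few dozen static assets.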

In our archetypal platform, the symptoms of this mismatch showed up clearly:

- Low edge cache hit ratio for video: Many segment requests went back to origin, saturating it.

- Origin egress costs: Skyrocketing bills from cloud storage and compute, even as QoE suffered.

- Regional hot spots: Viewers in certain countries suffered consistently more buffering than others.

The team tried to patch things: bumping instance sizes, tweaking cache TTLs, adding more origins. But without a delivery layer designed specifically for video, each fix solved one issue and created another.

Looking at your own stack, how much of your current CDN configuration is “video-aware” versus inherited from generic web performance playbooks?

What Makes a Video CDN Different?

The turning point in our case pattern was the decision to move from a generic CDN setup to a video-optimized CDN strategy. A video CDN isn’t just a marketing term; it bundles capabilities tailored to the lifecycle of video segments and manifests.

Key Capabilities of a Video CDN

Here are the capabilities that made the measurable difference:

- Segment-aware caching: Fine-grained control over caching for HLS/DASH segments and manifests, including handling of live sliding windows and VOD long-tail content.

- Partial object and range request optimization: Efficient handling of HTTP range requests used by many video players.

- Adaptive bitrate (ABR) friendly behavior: Optimized handling for parallel and sequential segment requests across different bitrates.

- Real-time QoE telemetry: Metrics such as rebuffering ratio, time-to-first-frame, and bitrate distribution, broken down by region, ISP, and device.

- Configurable cache keys: Ability to normalize or ignore query parameters that don’t affect video content, improving cache hit ratio.
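Here is a minimal sketch of cache-key normalization, assuming an allow-list approach. Only query parameters that actually change the returned bytes stay in the key. The parameter names (`bitrate`, `lang`) and the URLs are hypothetical. If you drop auth tokens from the key, the edge must still validate them before serving.

```python
from urllib.parse import urlsplit, parse_qsl, urlencode

# Hypothetical allow-list: parameters that change the content returned.
# Everything else (session IDs, tracking tags, cache-busters) is left out of the key.
CONTENT_PARAMS = {"bitrate", "lang"}

def cache_key(url):
    parts = urlsplit(url)
    kept = sorted(
        (k, v)
        for k, v in parse_qsl(parts.query, keep_blank_values=True)
        if k in CONTENT_PARAMS
    )  # sorting also makes parameter order irrelevant
    query = urlencode(kept)
    return f"{parts.netloc}{parts.path}" + (f"?{query}" if query else "")

a = cache_key("https://cdn.example.com/v/123/seg_00042.ts?session=abc&utm_source=app")
b = cache_key("https://cdn.example.com/v/123/seg_00042.ts?session=xyz")
print(a == b, a)  # True cdn.example.com/v/123/seg_00042.ts
```

Without normalization, every viewer's unique session parameter would create a separate cache entry for the same segment.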

Instead of treating video as “just more static files,” the platform’s new delivery layer understood the semantics of manifests and segments, aligning cache policy with how users actually watch.

When you look at your feature checklist for delivery partners, are you evaluating them as web CDNs or as video CDNs — and does your current provider really understand segment-level behavior?

Step 1: Measuring the Buffering Problem with Precision

The first critical step in the journey to 80% less buffering was measurement. Many teams track high-level player errors, but lack the granularity to tie buffering back to delivery decisions.

The platform’s engineering and video operations teams focused on three core metrics:

- Rebuffering ratio: Total rebuffering time divided by total viewing time, per region and per device type.

- Startup time (TTFF): Time from play button press to first video frame rendered.

- Bitrate distribution: Percentage of viewing time spent at each bitrate, indicating whether users are “stuck” at low quality.
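The three metrics can be rolled up per region along these lines. This is a sketch that assumes player beacons have already been aggregated into per-session records; the `Session` fields are hypothetical. "Viewing time" is taken here as playback plus stall time, though some teams divide by playback time only.

```python
from collections import defaultdict
from dataclasses import dataclass, field

@dataclass
class Session:
    region: str
    play_s: float      # seconds of actual playback
    rebuffer_s: float  # seconds stalled after playback started
    ttff_s: float      # play press -> first rendered frame
    bitrate_s: dict = field(default_factory=dict)  # kbps -> seconds played at that bitrate

def qoe_by_region(sessions):
    acc = defaultdict(lambda: {"rebuf": 0.0, "view": 0.0, "ttff": [], "bitrate": defaultdict(float)})
    for s in sessions:
        a = acc[s.region]
        a["rebuf"] += s.rebuffer_s
        a["view"] += s.play_s + s.rebuffer_s  # assumption: viewing time = playback + stalls
        a["ttff"].append(s.ttff_s)
        for kbps, secs in s.bitrate_s.items():
            a["bitrate"][kbps] += secs
    report = {}
    for region, a in acc.items():
        played = sum(a["bitrate"].values())
        report[region] = {
            # Time-weighted: total stalls over total viewing time, not a mean of per-session ratios.
            "rebuffering_ratio": a["rebuf"] / a["view"],
            "mean_ttff_s": sum(a["ttff"]) / len(a["ttff"]),
            "bitrate_share": {k: v / played for k, v in sorted(a["bitrate"].items())},
        }
    return report

sessions = [
    Session("EU", play_s=890, rebuffer_s=10, ttff_s=2.0, bitrate_s={3000: 800, 1500: 90}),
    Session("EU", play_s=1100, rebuffer_s=0, ttff_s=3.0, bitrate_s={3000: 1100}),
]
eu = qoe_by_region(sessions)["EU"]
print(eu["rebuffering_ratio"], eu["mean_ttff_s"])  # 0.005 2.5
```

In practice, percentiles of TTFF (for example p50 and p95) usually say more than the mean. Slicing by device type or ISP is just another grouping key.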

They also began to correlate these with delivery-side metrics:

- Edge cache hit ratios for segments and manifests.

- Origin egress and CPU utilization during peak events.

- Regional latency and throughput variations by ISP.

Within a few weeks, a pattern emerged: whenever cache hit ratio for video segments dropped below ~90% in a region, rebuffering ratio spiked. Certain ISPs also showed highly variable throughput, causing aggressive bitrate oscillation and more stalls.

Armed with this data, the team could now quantify a goal: move from a ~2.5–3% rebuffering ratio to below 0.5–0.7% on average. That is a reduction of roughly 70–80%, in line with what leading OTT and sports platforms report after modernizing their video delivery stacks.

If you plotted rebuffering ratio against cache hit ratio and regional latency for your platform today, would you see the same clear correlations — or are you still flying blind with only aggregate “play failures”?

Step 2: Redesigning the Delivery Architecture Around a Video CDN

With clear metrics, the platform could design a target architecture that aligned with how video traffic behaves under load. At a high level, the shift looked like this:

Before vs After: Architecture Snapshot

| Aspect | Before (Generic Delivery) | After (Video CDN-Centric) |
|---|---|---|
| Origins | Single cloud region serving all manifests and segments | Optimized origin layout with strategic replication for high-demand regions |
| CDN Configuration | Single CDN, generic cache rules for static assets and video | Video-optimized CDN configuration with manifest/segment-specific TTLs and cache keys |
| Visibility | Basic HTTP metrics, limited per-title QoE insight | Per-region, per-title QoE dashboards and alerting |
| Performance | Frequent origin saturation, uneven regional performance | High edge cache hit ratio, stable experience across geographies and ISPs |

On the CDN side, the change involved:

- Separating video traffic from generic web traffic for monitoring and configuration.

- Defining dedicated cache behaviors for HLS/DASH manifests, live segments, and VOD segments.

- Normalizing cache keys to avoid cache fragmentation caused by unnecessary query parameters.

- Implementing stronger connection reuse and TLS optimization between the CDN and origins.

The immediate impact was visible in logs and bills: fewer origin hits per view, more efficient use of edge cache, and a rapid stabilization of peak load curves. But the real test would still be QoE — especially under real viewer load during high-stakes events.

If you separated your video delivery configuration from everything else today, what “hidden” cache and routing inefficiencies would that reveal overnight?

Step 3: Tuning the Video CDN to Kill 80% of Buffering

Simply turning on a video CDN isn’t enough; the 80% buffering reduction came from targeted, data-driven tuning over several release cycles. Below are the specific levers that moved the metrics.

1. Improving Cache Efficiency for Live and VOD

For live streaming, the team focused on segment and manifest behavior:

- Configured shorter TTLs for live manifests while caching them aggressively during their validity window.

- Pre-warmed edges by fetching upcoming live segments shortly before viewers requested them, based on event schedules.

- Aligned segment durations (e.g., 4–6 seconds) to balance latency and cache efficiency. Longer segments mean fewer requests and less per-request overhead, while shorter segments keep live latency down.
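Here is a sketch of "segment-aware" TTLs, expressed as a lookup from request path to edge TTL. The URL patterns and every TTL value are hypothetical placeholders. In a real deployment, these would live in CDN cache rules or in `Cache-Control` headers set by the origin. Capping live playlists at half a segment duration is an assumption chosen so that no viewer sees a playlist more than half a segment stale.

```python
import re

# Hypothetical URL conventions; adapt the patterns to your packager's naming.
RULES = [
    (re.compile(r"/live/.+\.(m3u8|mpd)$"), "live_manifest"),
    (re.compile(r"/live/.+\.(ts|m4s|mp4)$"), "live_segment"),
    (re.compile(r"/vod/.+\.(m3u8|mpd)$"), "vod_manifest"),
    (re.compile(r"/vod/.+\.(ts|m4s|mp4)$"), "vod_segment"),
]

def edge_ttl_seconds(path, segment_duration_s=6.0):
    """Edge cache TTL by object type; all numbers here are illustrative placeholders."""
    for pattern, kind in RULES:
        if pattern.search(path):
            break
    else:
        return 0  # unknown object type: don't cache it
    if kind == "live_manifest":
        # Live playlists change every segment; cap staleness at half a segment.
        return max(1, int(segment_duration_s // 2))
    if kind == "live_segment":
        return 300  # typically immutable once published; only needs to outlive the live window
    if kind == "vod_manifest":
        return 3600
    return 30 * 24 * 3600  # VOD segments never change

print(edge_ttl_seconds("/live/match/720p/index.m3u8"))  # 3
print(edge_ttl_seconds("/vod/title/720p/seg_00001.m4s"))  # 2592000
```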

For VOD, long-tail content was the challenge. Many titles saw sporadic traffic, making it easy for segments to fall out of cache. The solution involved:

- Tiered caching strategies that differentiated between hot, warm, and cold VOD titles.

- Periodic prefetching of the first few segments for popular or promoted titles in each region.

- Smarter eviction policies that prioritized keeping first segments in cache to speed up startup time.
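The hot/warm/cold split and first-segment prefetching could be sketched as follows. The thresholds, the per-tier segment counts, and the URL layout are all hypothetical. How the list is actually pushed to edges (prefetch API, warm-up requests) depends on the CDN and is left out.

```python
def tier_titles(requests_by_title, hot_min, warm_min):
    """Classify VOD titles by recent request volume (thresholds are hypothetical)."""
    return {
        title: "hot" if reqs >= hot_min else "warm" if reqs >= warm_min else "cold"
        for title, reqs in requests_by_title.items()
    }

def prefetch_urls(tiers, renditions, hot_segments=3, warm_segments=1):
    """Opening segments worth placing in a region's edge before viewers ask for them."""
    per_tier = {"hot": hot_segments, "warm": warm_segments, "cold": 0}
    urls = []
    for title, tier in sorted(tiers.items()):
        for rendition in renditions:
            for i in range(per_tier[tier]):
                # Hypothetical URL layout; match your packager's naming scheme.
                urls.append(f"/vod/{title}/{rendition}/seg_{i:05d}.m4s")
    return urls

tiers = tier_titles({"t1": 5000, "t2": 300, "t3": 4}, hot_min=1000, warm_min=100)
urls = prefetch_urls(tiers, renditions=["720p", "1080p"])
print(tiers)      # {'t1': 'hot', 't2': 'warm', 't3': 'cold'}
print(len(urls))  # 8
```

Focusing on opening segments keeps the prefetch volume small while targeting the requests that decide startup time.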

These changes alone boosted cache hit ratios for video segments from the mid-80s to the mid- to high-90s in key regions, slashing origin round-trips that often turned into visible buffering.
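A few points of hit ratio matter more than they look, because origin load scales with the miss ratio, not the hit ratio. A quick sketch, assuming every edge miss goes straight to origin (no shield tier):

```python
def origin_requests(edge_requests, hit_ratio):
    """Requests that miss the edge and fall through to origin (no shield tier assumed)."""
    return edge_requests * (1 - hit_ratio)

before = origin_requests(1_000_000, 0.85)  # mid-80s hit ratio
after = origin_requests(1_000_000, 0.95)   # mid-90s hit ratio
print(round(before), round(after), f"{1 - after / before:.0%} fewer origin requests")
# 150000 50000 67% fewer origin requests
```

Going from 85% to 95% hits cuts the miss rate from 15% to 5%, so origin traffic falls to a third of its former level.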

Looking at your access logs, how many of your segments are actually served from cache during peak hours — and what would a 5–10 percentage point increase in cache hit ratio do to your rebuffering?

2. Reducing Time-to-First-Frame (TTFF)

Users notice startup delay more acutely than almost any other performance issue. The team targeted TTFF on three fronts:

- Manifest size optimization: Removed unnecessary attributes, trimmed rendition lists where appropriate, and ensured manifests were compressible and cached efficiently.

- Connection reuse: Tuned keep-alive and multiplexing between player, CDN, and origin, cutting down on handshake overhead.

- Edge prioritization of initial segments: Ensured the first 2–3 segments of the chosen bitrate ladder were treated as “hot” objects with aggressive caching and prefetching.

Across mobile networks, these optimizations brought average startup time down from 4–6 seconds to around 2–3 seconds, a reduction of 40–50%. Combined with lower rebuffering during playback, the overall perceived smoothness improved dramatically.

If you opened your player’s dev tools right now, how many network round-trips does it take from clicking play to receiving the first media segment — and which of those trips could your CDN eliminate or shorten?
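To make the round-trip question concrete, here is a deliberately simplified TTFF budget. It assumes an HLS-style startup (master playlist, then media playlist, then first segment) where every request is an edge cache hit. It ignores DNS, TCP slow start, and decode time. The RTT and transfer figures are hypothetical. A new connection costs one RTT for TCP plus one RTT for a TLS 1.3 handshake, or two for a full TLS 1.2 handshake.

```python
def ttff_ms(rtt_ms, first_segment_transfer_ms, warm_connection,
            playlist_fetches=2, tls_rtts=1):
    """Rough time-to-first-frame budget for an HLS-style startup (decode time ignored)."""
    # New connection: 1 RTT for the TCP handshake plus the TLS handshake RTTs.
    handshake_rtts = 0 if warm_connection else 1 + tls_rtts
    # Sequential requests: master playlist -> media playlist -> first segment.
    request_rtts = playlist_fetches + 1
    return (handshake_rtts + request_rtts) * rtt_ms + first_segment_transfer_ms

print(ttff_ms(100, 800, warm_connection=False))  # 1300
print(ttff_ms(100, 800, warm_connection=True))   # 1100
```

Because the three requests depend on each other, multiplexing alone cannot overlap them. The levers are fewer sequential fetches, reused connections, and making sure none of those requests misses the edge, since a miss adds an edge-to-origin round trip on top.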

3. Handling Unstable Networks with Smarter ABR Delivery

Even with a perfect CDN, last-mile conditions vary wildly. That’s where partnership between the player’s adaptive bitrate (ABR) logic and the CDN becomes crucial.

The platform’s team worked on:

- Bitrate ladder tuning: Adjusted renditions to add lower bitrates for constrained networks, and refined step sizes so switching was less jarring.

- CDN-friendly chunk sizes: Ensured segment sizes and encoding parameters worked well with the CDN’s caching and throughput characteristics.

- Geo- and ISP-aware configurations: Used QoE data to tweak player behavior in regions or ISPs with known constraints.
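For readers less familiar with ABR, here is a generic throughput-based selection rule. It is a common textbook heuristic, not the platform's actual player logic. Production players typically use more elaborate buffer-based or hybrid algorithms. The ladder, safety factor, and low-buffer threshold are hypothetical.

```python
LADDER_KBPS = [400, 800, 1600, 3000, 5000]  # hypothetical ladder with low rungs for weak networks

def pick_rendition(ladder_kbps, throughput_kbps, buffer_s, safety=0.8, low_buffer_s=5.0):
    """Throughput-based ABR rule: highest rung that fits a discounted throughput estimate."""
    ladder = sorted(ladder_kbps)
    budget = throughput_kbps * safety  # headroom for throughput variance
    if buffer_s < low_buffer_s:
        budget *= 0.5  # close to a stall: be extra conservative
    affordable = [b for b in ladder if b <= budget]
    return affordable[-1] if affordable else ladder[0]  # never go below the lowest rung

print(pick_rendition(LADDER_KBPS, throughput_kbps=4000, buffer_s=20))  # 3000
print(pick_rendition(LADDER_KBPS, throughput_kbps=4000, buffer_s=3))   # 1600
print(pick_rendition(LADDER_KBPS, throughput_kbps=300, buffer_s=20))   # 400
```

The last call shows why lower rungs help on constrained networks: the lower the floor, the closer the player can track a collapsing connection instead of stalling.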

With the CDN reliably delivering segments quickly and predictably, the player could maintain smooth playback at the highest sustainable bitrate per user, reducing mid-stream stalls dramatically.

Does your ABR ladder design consider how your CDN behaves under load, or was it set once by the encoding team and left untouched despite changes in networks and viewers?

4. Protecting Origins Without Sacrificing QoE

Origin overload is a common hidden cause of buffering. When too many segment requests miss the cache and hit the origin, CPU and I/O contention can cause cascading delays.

The platform’s video CDN strategy included:

- Read-optimized storage layouts: Ensuring segment files were organized and replicated for random-read workloads.

- Origin shield / mid-tier caching: Leveraging the CDN’s intermediate caching layers to dramatically reduce direct origin hits.

- Rate limiting and graceful degradation: Prioritizing in-flight segment requests and preventing thundering herds during cache misses.
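The thundering-herd protection described above is usually implemented as request coalescing, also called request collapsing. Concurrent misses for the same object wait on one origin fetch instead of each hitting the origin; NGINX's `proxy_cache_lock` is one real-world example. Below is a toy asyncio sketch of the idea. It has no eviction or timeouts, and a failed fetch propagates to every waiter without being cached.

```python
import asyncio

class CoalescingCache:
    """Toy edge cache: concurrent misses for one key share a single origin fetch."""

    def __init__(self, fetch_from_origin):
        self._fetch = fetch_from_origin  # async callable: key -> bytes
        self._store = {}                 # completed objects (no eviction in this sketch)
        self._inflight = {}              # key -> Task for the origin fetch in progress
        self.origin_calls = 0

    async def get(self, key):
        if key in self._store:
            return self._store[key]
        task = self._inflight.get(key)
        if task is None:
            # First miss for this key: start one origin fetch and register it
            # before yielding, so later callers wait on it instead of refetching.
            task = asyncio.create_task(self._fill(key))
            self._inflight[key] = task
        return await task

    async def _fill(self, key):
        try:
            self.origin_calls += 1
            body = await self._fetch(key)
            self._store[key] = body
            return body
        finally:
            self._inflight.pop(key, None)

async def main():
    async def slow_origin(key):
        await asyncio.sleep(0.05)  # simulated origin latency
        return f"bytes of {key}".encode()

    cache = CoalescingCache(slow_origin)
    # 1,000 viewers request the same fresh live segment at the same moment.
    results = await asyncio.gather(*(cache.get("seg_01234.m4s") for _ in range(1000)))
    return cache.origin_calls, len(results)

calls, n = asyncio.run(main())
print(calls, n)  # 1 1000
```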

By reducing origin dependency and smoothing request patterns, the team eliminated many of the worst-case buffering events that previously occurred during big releases or matches.

In your last major live event, did your origin metrics stay flat and calm, or did you see CPU and I/O spikes that coincided perfectly with complaints about buffering?

Before-and-After: Quantifying the 80% Buffering Reduction

After several months of iterative optimization on top of the video CDN, the platform’s KPIs looked markedly different. While numbers will vary by service, region, and content mix, the pattern is instructive.

| Metric | Before Video CDN Optimization | After Video CDN Optimization |
|---|---|---|
| Average rebuffering ratio | ~2.5–3% | ~0.4–0.6% (≈80% reduction) |
| Peak-event rebuffering ratio | >5% in certain regions | ~1–1.2% |
| Average startup time (TTFF) | 4–6 seconds on mobile networks | 2–3 seconds on mobile networks |
| Edge cache hit ratio for segments | Mid-80s % | Mid- to high-90s % in key markets |
| Origin egress costs | Growing faster than view hours | Flattened, then dropped 30–40% per view |
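As a sanity check on the headline figure, here is the relative reduction for each range in the table, computed at its midpoint. Treating ">5%" as exactly 5% makes the peak-event figure a lower bound.

```python
def relative_reduction(before, after):
    return (before - after) / before

# Midpoints of the before/after ranges in the table (">5%" is treated as 5%).
pairs = {
    "average rebuffering ratio": (2.75, 0.5),
    "peak-event rebuffering ratio": (5.0, 1.1),
    "mobile TTFF": (5.0, 2.5),
}
for name, (before, after) in pairs.items():
    print(f"{name}: {relative_reduction(before, after):.0%} lower")
# average rebuffering ratio: 82% lower
# peak-event rebuffering ratio: 78% lower
# mobile TTFF: 50% lower
```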

The most important feedback, however, didn’t come from dashboards. It came from viewers and business teams:

- Support tickets about buffering and “video not starting” dropped significantly.

- Marketing teams found that free-trial-to-paid conversion rates improved as first impressions got smoother.

- Rights holders and advertisers were more comfortable placing high-value content on the platform.

In the end, less buffering didn’t just mean fewer complaints — it meant more watch time, higher LTV, and more leverage in content negotiations.

If you projected even a modest 20–30% improvement in viewer retention from smoother playback, how would that change your willingness to invest in a modern video CDN strategy?

Operational Lessons for Streaming and OTT Leaders

Beyond the technical changes, the case pattern reveals several strategic lessons that apply to any serious streaming operation.

Lesson 1: Treat Delivery as a Product, Not a Utility

Successful platforms treat their video delivery stack as a product with its own roadmap, KPIs, and stakeholders — not as a hidden service owned solely by infrastructure teams.

That means:

- Defining explicit QoE targets (e.g., rebuffering < 0.5% in top markets, TTFF < 3 seconds on mobile).

- Reporting QoE metrics alongside user growth, engagement, and revenue metrics.

- Involving product, content, and marketing in prioritizing delivery improvements.
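Explicit targets are easiest to report on when they live as data rather than in slide decks. Here is a minimal sketch that uses the example targets from the list above as placeholders, with hypothetical market names and figures:

```python
# Example targets from the list above; treat them as placeholders for your own.
QOE_TARGETS = {
    "rebuffering_ratio": 0.005,  # rebuffering < 0.5% in top markets
    "ttff_mobile_s": 3.0,        # TTFF < 3 seconds on mobile
}

def breaches(metrics_by_market, targets=QOE_TARGETS):
    """List (market, metric, observed, limit) for every target that is not met."""
    return [
        (market, name, observed[name], limit)
        for market, observed in metrics_by_market.items()
        for name, limit in targets.items()
        if observed[name] >= limit
    ]

current = {  # hypothetical weekly figures
    "market-a": {"rebuffering_ratio": 0.004, "ttff_mobile_s": 2.4},
    "market-b": {"rebuffering_ratio": 0.009, "ttff_mobile_s": 3.4},
}
for row in breaches(current):
    print(row)
# ('market-b', 'rebuffering_ratio', 0.009, 0.005)
# ('market-b', 'ttff_mobile_s', 3.4, 3.0)
```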

When your delivery KPIs appear on the same dashboards as revenue and churn, how differently do your conversations with leadership sound?

Lesson 2: Make Your CDN Vendor a Strategic Partner

Video CDNs aren’t “set and forget”; they’re partners that you tune and evolve with. The best outcomes come when your teams and your CDN provider’s solution architects collaborate deeply on:

- Per-title and per-event preparation (especially for live sports and premieres).

- Data sharing — both player-side analytics and CDN-side logs.

- Joint experiments with cache policies, routing strategies, and ABR configurations.

Platforms that treat their CDN as a commodity pipe often leave huge performance and cost savings on the table.

If you asked your current CDN to walk you through a tailored video optimization plan tomorrow, would they be ready — or would they just send you a generic documentation link?

Lesson 3: Bake Observability into Every Layer

Delivery optimization depends on visibility. The more granular your telemetry, the faster you can diagnose and fix issues before viewers notice.

Best-in-class platforms stitch together:

- Player-side metrics (stall events, bitrate switches, errors).

- CDN logs (latency, cache hit/miss, status codes, geography, ISP).

- Origin metrics (CPU, I/O, storage throughput).

With this observability stack, you can quickly answer questions like: “Is this spike in buffering due to a new player release, a regional ISP issue, or a misconfigured cache rule?”
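Here is a sketch of how joined telemetry turns that question into a ranking. It assumes player sessions have already been joined to CDN log fields by session ID. The field names `player_version`, `isp`, and `cache_rule` are hypothetical. Each slice's rebuffering ratio is compared with its own baseline, or with the overall baseline when the slice is new.

```python
from collections import defaultdict

# Hypothetical fields on rows where player sessions were already joined to CDN logs.
DIMENSIONS = ("player_version", "isp", "cache_rule")

def _ratio_by(rows, dim):
    totals = defaultdict(lambda: [0.0, 0.0])  # slice value -> [rebuffer_s, view_s]
    for r in rows:
        totals[r[dim]][0] += r["rebuffer_s"]
        totals[r[dim]][1] += r["view_s"]
    return {value: rb / view for value, (rb, view) in totals.items()}

def rank_suspects(current, baseline):
    """Rank (dimension, value) slices by how much their rebuffering ratio rose vs baseline."""
    overall = sum(r["rebuffer_s"] for r in baseline) / sum(r["view_s"] for r in baseline)
    suspects = []
    for dim in DIMENSIONS:
        base = _ratio_by(baseline, dim)
        for value, ratio in _ratio_by(current, dim).items():
            # Slices with no history (e.g. a brand-new player build) use the overall baseline.
            suspects.append((ratio - base.get(value, overall), dim, value))
    return sorted(suspects, reverse=True)

def row(player, isp, rule, rebuffer_s, view_s):
    return {"player_version": player, "isp": isp, "cache_rule": rule,
            "rebuffer_s": rebuffer_s, "view_s": view_s}

baseline = [row("5.1", "isp-a", "vod-default", 5, 1000), row("5.1", "isp-b", "vod-default", 5, 1000)]
current = [row("5.2", "isp-a", "vod-default", 5, 1000), row("5.2", "isp-b", "vod-default", 40, 1000)]
suspects = rank_suspects(current, baseline)
print(suspects[0][1:])  # ('isp', 'isp-b')
```

In this toy data, the new player build also looks worse, but only because isp-b traffic is mixed into it. Ranking slices side by side points at the ISP first.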

Right now, if buffering suddenly doubled for viewers on one mobile network in one country, how quickly could you pinpoint the root cause — minutes, hours, or days?

Why BlazingCDN is a Natural Fit for Video and Streaming Workloads

For media, OTT, and live streaming businesses, the right video CDN partner needs to combine three things: high performance, rock-solid reliability, and economics that scale with exploding view hours. This is where BlazingCDN is engineered to excel.

BlazingCDN is a modern, video-first CDN designed for enterprises that expect 100% uptime and predictable, low-latency delivery — with stability and fault tolerance on par with Amazon CloudFront, but at a fraction of the cost. With pricing starting at just $4 per TB (that’s $0.004 per GB), it gives streaming platforms the financial room to grow viewership without dreading their next infrastructure bill.

Because BlazingCDN is built for demanding workloads, it’s especially well-suited to media companies, OTT platforms, and live event broadcasters that need to scale quickly for premieres or matches, adjust configurations flexibly across regions, and keep rebuffering consistently low even under unpredictable spikes. Many forward-thinking global brands that care deeply about both reliability and efficiency have already made similar choices for their high-traffic digital properties.

If you're evaluating how a video CDN can reshape your streaming economics and QoE, it's worth seeing how features like intelligent caching, fine-grained configuration, and transparent pricing come together in practice in BlazingCDN's solutions for media companies.

Looking at your roadmap for the next 12–18 months, will you be able to meet your quality and cost targets with your current delivery stack — or is it time to bring in a CDN partner designed for video at scale?

Practical Checklist: How to Replicate This Buffering Reduction

If you want to follow a similar path to slashing buffering (and costs), here’s a concrete checklist you can start working through this quarter.

Phase 1 – Assess and Baseline

- Instrument your players to capture rebuffering ratio, TTFF, and bitrate distribution per region, device, and ISP.

- Collect CDN logs and correlate cache hit ratios with QoE metrics.

- Identify your top 10 regions and ISPs by watch time and problems (complaints, errors, poor QoE).

- Estimate the cost of current origin egress, broken down by VOD vs live.
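The origin-egress estimate can start as simple arithmetic: view-hours × average bitrate gives delivered volume, and the edge miss ratio gives the share served by origin. Every input below is hypothetical, including the $0.05/GB placeholder, which is not any provider's quoted rate. The model also ignores shield tiers, manifests, and retries.

```python
# Hypothetical monthly traffic per type: (view-hours, average delivered kbps, edge hit ratio)
TRAFFIC = {
    "vod": (1_000_000, 3000, 0.85),
    "live": (400_000, 4500, 0.95),
}
ORIGIN_PRICE_PER_GB = 0.05  # placeholder; substitute your cloud provider's egress rate

def origin_egress(view_hours, avg_kbps, hit_ratio, price_per_gb):
    """Return (origin GB, origin cost) assuming every edge miss is served by the origin."""
    delivered_gb = view_hours * 3600 * avg_kbps * 1000 / 8 / 1e9  # kbit/s over time -> GB
    origin_gb = delivered_gb * (1 - hit_ratio)
    return origin_gb, origin_gb * price_per_gb

report = {kind: origin_egress(*t, ORIGIN_PRICE_PER_GB) for kind, t in TRAFFIC.items()}
for kind, (gb, cost) in report.items():
    print(f"{kind}: {gb:,.0f} GB from origin, ${cost:,.0f}")
# vod ≈ 202,500 GB / $10,125; live ≈ 40,500 GB / $2,025
```

Splitting by VOD and live this way shows where a hit-ratio gain would pay off most.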

By the end of this phase, you should have a clear picture of “where it hurts” the most and how much buffering is costing you in engagement and infrastructure spend.

Phase 2 – Design Your Video CDN Strategy

- Decide whether to dedicate a CDN purely to video traffic or to carve out distinct configurations.

- Define target QoE SLAs for your top regions (e.g., rebuffering < 0.7%, TTFF < 3 seconds).

- Select a CDN provider that supports segment-aware caching, detailed analytics, and flexible configuration.

- Plan origin optimization and, if necessary, replication for high-demand regions.

This phase sets the technical and business goals that your CDN partner should help you meet or exceed.

Phase 3 – Implement and Iterate

- Roll out video-specific caching rules for manifests and segments, starting with your top titles and events.

- Optimize startup sequences: manifest size, initial segments, and connection reuse.

- Tune ABR ladders and segment sizes based on real throughput and device distributions.

- Monitor events in real time, and run controlled A/B tests on delivery changes.

Each iteration should be tied to measurable QoE changes. Over a few cycles, you’ll start seeing the kind of step-change reductions in buffering that this case study describes.

Which item on this checklist could you start this week with your current team — and which will require a strategic shift in how you work with your CDN provider?

Your Next Move: Turn Buffering into a Solved Problem

Buffering isn’t an unavoidable tax on streaming; it’s a symptom of delivery architectures and configurations that haven’t yet caught up with how modern video is produced, distributed, and consumed. The experience of leading OTT, live sports, and VOD platforms shows that cutting buffering by 60–80% is achievable when you combine precise measurement, video-aware CDN capabilities, and iterative optimization.

If you recognize your own pain points in this case study — angry viewers during big events, inconsistent QoE across regions, origin bills that climb faster than your revenue — you’re exactly the kind of organization that benefits most from a modern video CDN approach.

Now is the moment to act: review your QoE metrics, map the biggest gaps, and start a serious conversation about how your delivery strategy needs to evolve. Share this article with your video engineering, product, and infrastructure leads, and use it as a blueprint for your next planning session. And when you’re ready to explore how a high-performance, 100%-uptime, video-optimized CDN with CloudFront-level reliability but far lower cost can fit into your stack, bring BlazingCDN into that discussion and see how much buffering, churn, and infrastructure spend you can take off the table.

What’s stopping you from making your next big live event or series launch the moment your viewers finally stop talking about buffering — and start talking about your content instead?